Linux, an open-source implementation of UNIX, is

rapidly gaining acceptance among iSeries users. With the V5R2 enhancements,

Linux for 64-bit PowerPC can run natively in as many as 31 separate logical

partitions (LPARs). Traditionally, many organizations use the iSeries server as

a reliable and highly scalable database server. The iSeries database, DB2 UDB

for iSeries, is fully integrated in OS/400 and exploits its robust features,

such as single-level storage, tight security, and object-based architecture. For

these reasons, we at IBM believe that DB2 UDB for iSeries will remain the

database of choice for most iSeries shops.

Generally, Linux applications

can use three programming environments to access the iSeries database: ODBC,

JDBC, and DB2 Connect gateway. This article provides implementation tips and a

number of coding examples that illustrate how to optimize the access to your DB2

UDB for iSeries database from a Linux partition by using the iSeries ODBC driver

for Linux.

ODBC has become the de facto standard application programming

interface for providing connectivity to heterogeneous databases. Its popularity

is based on its ability to access multiple vendor data sources from a single

application. ODBC developers can create applications without being concerned

about database-specific calls. The Rochester development lab provides the

iSeries ODBC Driver for Linux that is based on the iSeries ODBC driver for

Windows. The Linux ODBC driver uses the iSeries database host servers as the

access point to the system. It communicates with the back-end database server

job through a socket connection. The iSeries ODBC Driver for Linux is an ODBC

3.5 ANSI driver with the ability to store and process Unicode data. It is a

no-charge iSeries licensed program (5733-LO1) that you can

download.

Please note that the download site provides two separate install

images:

- ODBC Driver for Power PC

- ODBC Driver for Intel-based Linux workstations

You need to

download the PowerPC version if you plan to access iSeries from a Linux

partition.

Note that Linux commands and utilities shown in the article

have been executed using SuSE SLES 8 distribution. The command syntax and

location of directories may differ slightly for other Linux distributions.

Installation and Configuration

Apart from the iSeries

ODBC Driver for Linux, you need three other components in the Linux partition to

successfully compile and run ODBC applications in a Linux

partition:

unixODBC Driver Manager Version 2.0.11 or above--unixODBC is

an application and library (referred to as a driver manager) that knows about

available drivers, allows the definition of new data sources, and manages the

connections to the defined data sources. Luckily, the Linux for iSeries

distributions include precompiled, PowerPC versions of the unixODBC Driver

Manager. You can use the following command to verify the version currently

installed in your Linux partition:

C/C++ compiler--The distributions contain a very good open-source

GNU compiler. To check if it's installed on your system, run the following

command:

libodbc.so shared library--This library is required at the link

time. Make sure that the appropriate link exists in the /usr/lib directory.

Sometimes, the soft link is missing; consequently, the link phase of the compile

process will fail. You can easily fix this problem by manually creating the

missing symbolic link to an implementation of the shared library that has been

installed with the unixODBC driver manager. Here's an

example:

Although a Red Hat Package Manager (RPM) image of the iSeries ODBC Driver

for Linux may exist on your system, we strongly encourage you to download the

latest version of the driver from the URL shown earlier in this article. You

should have a copy of iSeriesODBC-5.1.0-0.14.ppc.rpm or later. This version

contains several important memory fixes that significantly reduce the driver's

memory footprint.

The driver can be installed with the RPM command.

Here's an example:

The driver will not successfully install if a previous version exists on

the system. In this case, you need to "boot out" the older version with the

following command:

Sometimes, removing an RPM isn't practical, particularly if other

programs on the system depend on it. In that case, re-install the driver using

the --force option:

The RPM command installs the driver into the /opt/ibm/iSeriesODBC

directory. You can quickly verify that the driver works properly by using the

CWBPING utility that is part of the driver installation:

Here, os400 is the TCP/IP host name of the OS/400 partition.

You could also use a physical IP address:

Make sure that the connection to the Data Access host server job was

successful. The driver install process registers the iSeriesODBC driver with the

driver manager by adding the driver info to the odbcinst.ini configuration file.

This file can be found in /etc/unixODBC directory (/etc with older versions of

the driver manager). Here's example content of the odbcinst.ini file:

Description = iSeries Access for Linux ODBC Driver

Driver = /opt/ibm/iSeriesODBC/lib/libcwbodbc.so

Setup = /opt/ibm/iSeriesODBC/lib/libcwbodbc.so

Threading = 2

The next step in the configuration process is to create a data source

name (DSN) entry for the iSeries database. You can accomplish this task either

by using the graphical tool ODBCConfig or by editing the odbc.ini configuration

file.

There are two types of DSN entries: system DSN and user DSN. The

system DSN is usually created by root and resides in the /etc/unixODBC

directory. Any user on the system can use a system DSN. A user DSN is scoped to

a particular user and cannot be shared with other users on the system. The

configuration file for a user DSN is called .odbc.ini and is located in the

user's home directory--for instance, /home/jarek/.odbc.ini.

Here's an

example entry for a system DSN added to the odbc.ini file:

Description = iSeries os400

Driver = iSeries Access ODBC Driver

System = os400

Here, os400 is the host name of the target iSeries

system.

Once the system DSN exists, you can use the isql utility (part of

the unixODBC driver manager installation) to obtain an ODBC connection to the

target DB2 UDB for iSeries database and execute SQL statements. The utility is a

bit "clunky," but it can be quite useful to run simple ad hoc SQL statements.

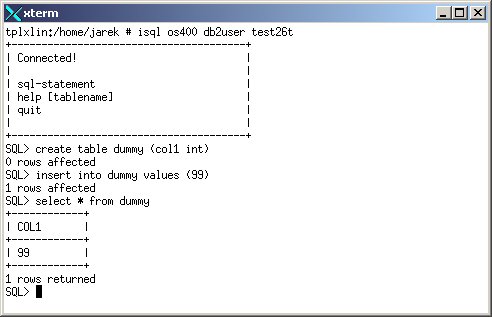

Figure 1 shows a simple isql

session:

Figure

1: Use an isql session to run SQL

statements. (Click images to enlarge.)

Type isql at the shell prompt to get some basic help

info about supported syntax and options.

Simple ODBC C++ Application

This section covers ODBC implementation details for a

sample C++ program called

testodbc.

To compile the source, use the following command in the Linux partition:

The application retrieves a number of rows from the QCUSTCDT table

located in QIWS library. The selection criterion is based on the current value

of the STATE parameter. Here's the SELECT statement used by testodbc:

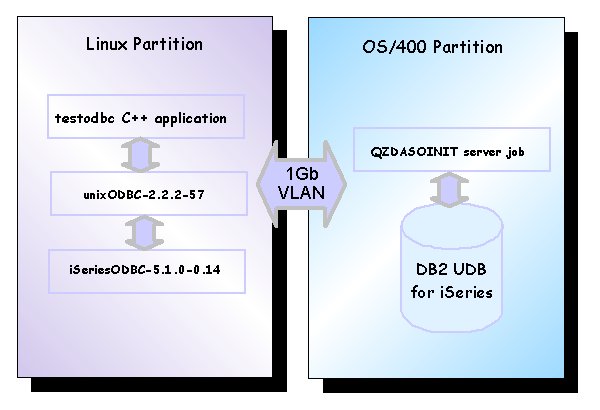

Figure 2 shows the software components

involved in the execution of this sample

application.

Figure

2: This is how an ODBC client accesses DB2 on

iSeries.

Although the testodbc application is fairly simple, it covers some important aspects of efficient ODBC programming for DB2 UDB for iSeries.

Addressing the Locale Issues

Let's start by examining the class constructor of

the testodbc program, as seen in Figure 3.

|

Figure 3: The class constructor for the sample

application.

At [1], the locale is set for the application.

This is important in cases where the Linux application uses the entire character

set for a given client code page. For instance, it may use a special character,

such as a tilde (~), as a keyword delimiter in a literal used in a WHERE clause.

The iSeries ODBC driver is using the locale set up by the application to convert

the SQL statements. If the application does not set up the locale in its class

constructor or main() function, the default compiler "C" locale will be used.

This means that a client code page of 367 will be used for some client

conversions. These conversions do not handle much outside of the a-z, 0-9

character range. As a result, an SQL statement may not be properly converted to

the database server code page. In this case, the DB2 runtime throws an SQL

–104 error (Token was not valid). By specifying the locale in the

application, you eliminate this risk.

Connection Keywords

The iSeries ODBC driver supports a number of

connection keywords that you can use to control the connection properties and to

improve the application's performance. The complete list of the connection

keywords can be found in the iSeries

Information Center.

There are several methods to specify the

connection attributes. For instance, you can modify the DSN entry in the

odbc.ini file. Figure 4 shows an example of a system DSN entry.

|

Figure 4: Here's an example of a system DSN

entry.

Alternatively, you can specify the connection attribute

keywords on the connection string in the application. Figure 5 shows a code

snippet illustrating this method.

|

Figure 5: Specify the connection attribute keywords on

the connection string.

CommitMode specifies the default

transaction isolation level. In this case, it is set to Read Uncommitted

(*CHG).

ExtendedDynamic specifies whether to use extended dynamic

(package) support. A value of 1 enables the packages. This is the default for

iSeries ODBC driver.

DefaultPkgLibrary specifies the library for

the SQL package.

DefaultPackage specifies how the extended

dynamic (package) support will behave.

The SQL packages are server-side

repositories for SQL statements. Packages contain the internal structures--such

as parse trees and access plans--needed to execute SQL statements. Because SQL

packages are a shared resource, the information built when a statement is

prepared is available to all the users of the package. This saves processing

time, especially in an environment where many users are using the same or

similar statements. Because SQL packages are permanent, this information is also

saved across job initiation/termination and across IPLs. In fact, SQL packages

can be saved and restored on other systems. Usually, there is no need to delete

SQL packages, but sometimes it is still reasonable to delete them, especially

when you make significant changes to the database design. You can use the WRKOBJ

command to locate and delete an SQL package. Here's the command to find the SQL

package for the testodbc application:

The actual package name is TESTODBCVBA and is generated by the system.

The SQL packages are named by taking the client application name and appending

three letters that are an encoded set of the package configuration

attributes.

Consult the FAQ

document for more details about the iSeries SQL package support.

Reusable Open Data Paths (ODPs)

An open data path (ODP)

definition is an internal OS/400 object that is created when certain SQL

statements (such as OPEN, INSERT, UPDATE, and DELETE) are executed for the first

time in a given job (or connection). An ODP provides a direct link to the data

so that I/O operations can occur. The process of creating a new ODP object is

fairly CPU- and IO-intensive, so whenever possible, the iSeries DB2 runtime

tries to reuse the existing ODPs. For instance, a SQLCloseCursor() API call may

close the SQL cursor but leave the ODP available to be used the next time the

cursor is opened. This can significantly reduce the processing and response time

in running SQL statements. A reusable OPD usually requires 10 to 20 times less

CPU resources than a newly created ODP. Therefore, it is important that the

applications employ programming techniques that allow the DB2 runtime to reuse

ODPs.

With dynamic interfaces, such as ODBC or JDBC, full opens are

avoided by using a "prepare once, execute many" programming paradigm. It means

that when an SQL statement is going to be executed more than once, you should

prepare the statement just once and then reuse the prepared statements for

consecutive executions. Although DB2 does try to convert literals to parameter

markers so that similar statements can be reused, it is a better programming

practice to explicitly implement parameter markers. That way, you can quite

significantly improve the chances for reusable ODPs. The code snippet in Figure

6 illustrates how to implement the "prepare once, execute many" programming

technique.

|

Figure 6: Use the "prepare once, execute many"

programming technique.

The error-handling code has been

removed for clarity. At [1], the statement text is assigned to a variable. Note

that a parameter marker is used in the WHERE clause. The statement is then

prepared just once at [2]. The current value of the parameter is bound to the

prepared statement at [3]. The prepared statement is executed at [4]. Steps [3]

and [4] are repeatedly executed in a for loop.

Techniques and Fixes to Further Improve ODBC Performance

I've discussed the techniques used by a sample application to tune the ODBC performance. However, depending on your application architecture, you may consider other methods of improving ODBC performance.

Connection Pooling

The unixODBC manager supports connection pooling,

which can potentially limit the number of connections required by your

application-accessing iSeries database. All depends on whether your application

processes running in a Linux partition can be shared/reused by multiple clients.

The unixODBC connection pooling implementation is scoped to a process. In other

words, the connection is reused if the calling process is the same as the

process that initiated it. So, if your application creates and drops connections

from a limited number of persistent processes, the connection pooling will

certainly improve the performance. Setting up the connection pooling is easy.

Just edit the odbcinst.ini so it looks as shown in Figure 7.

|

Figure 7: Set up connection pooling by editing the

odbcinst.ini.

The critical values are Pooling = Yes and

CPTimout. You may want to experiment with the latter value. In the

example, it was set to 10 minutes. The settings shown above worked fine with a

test program that opened and closed a connection several times. During the

program execution, there was one QZDASOINIT job on the iSeries because the

SQLDisconnect request was intercepted by the driver manager, and the connection

was actually not closed.

Note that the connection pooling implemented by

unixODBC is functionally similar to the connection pooling on Windows provided

by the MS ODBC manager.

Blocking, Stored Procedures, and Result Sets

Reduce the number of trips to the server with blocking, stored procedures, and result sets. See IBM's iSeries 400 PC Client/Server Programming Web site for iSeries-specific implementation details.

Fix for Apps Frequently Switching the AUTOCOMMIT Mode

Some ODBC applications frequently switch the AUTOCOMMIT mode on and off. For instance, when transactional work is done, the AUTOCOMMIT is switched off; then, right after the transaction is committed or rolled back, the application switches the AUTOCOMMIT back on. This processing flow may cause some ODPs to become non-reusable. Recently, the Rochester lab provided a fix that eliminates the non-reusable ODPs even if the application oscillates the AUTOCOMMIT setting. The required fixes are SI07460 for V5R1 and SI08248 for V5R2.

Troubleshooting

Troubleshooting a multi-tier application may be a bit tricky, because you need to deal with software components that reside in separate partitions. Our experience shows that two tools are particularly useful for pinning down the potential problem areas:

- The joblog messages for a database server job usually provide enough details to isolate DB2 runtime issues.

- The ODBC trace utility provides granular information about the data flow between your Linux application and the database server job.

Job Log Messages

As mentioned earlier, an ODBC client communicates

with a corresponding iSeries server job. This server job runs the SQL requests

on behalf of the client. More precisely, when an ODBC client submits an SQL

statement, the statement is passed to a server job that, in turn, calls the DB2

runtime to execute the statement. The results are then reformatted and marshaled

to the client. The iSeries database access server jobs are called QZDASOINIT,

and they run in the QUSRWRK subsystem. At any given time, there maybe a large

number of database server jobs active on the system, so the first step is to

identify the job that serves your particular ODBC connection. The easiest method

to accomplish this task is to run the following CL command from the OS/400

prompt:

Here, DB2USER is the user profile you use to connect to the iSeries

system. Note that a QZDASOINIT job is assigned to an ODBC connection

after the connection has been established, so you need to set a

breakpoint in the client application below the SQLDriverConnect API

call.

Once the Work with Object Locks appears, key in 5 (Work with Job)

next to the only QZDASOINIT job that should be listed. This shows the Work with

Job dialog. Select option 10 to display the job log for the database server job.

Now, you can search the job log for any error messages generated by the DB2

runtime.

Sometimes, it's also useful to include debug messages in the

job log. The debug messages are informational messages written to the job log

about the implementation of a query. They describe query implementation methods

such as use of indexes, join order, ODP implementation (reusable versus

non-reusable), and so on. The easiest way to instruct the iSeries to include the

debug messages is to specify the TRACE=4 keyword on the connection string.

Here's an example:

A sample of the job log messages with debug messages enabled is shown

below, in Figure 8:

|

Figure 8: Job log messages with debug messages enabled

looks like this.

So the job log offers fairly detailed info on

how the ODBC client requests were implemented by the DB2 runtime.

ODBC Trace

The iSeries ODBC driver provides the cwbtrc utility,

which can be used to collect detailed client-side traces and logs. Rather than

writing all the data out to a daemon and letting it format and store the

data--which is how the Windows tracing operates--the Linux tracing writes

everything straight out to ASCII text files.

The tracing is switched off

by default. We recommend that you use the following parameters when invoking the

cwbtrc utility:

The /dt:1 parameter turns the Detail Trace on, while /hl:1 turns the

History Log on. At this point, tracing is activated and no further configuration

is required. The trace files will be placed in the .iSeries_ODBC directory

located in your home directory. The output files have the following naming

convention:

- cwbdetail-

-pid.csv - cwbhistory-

-pid.csv

See

the

driver's online documentation for specific syntax and functionality

of other cwbtrc parameters.

The Detail Trace and the History Log

store their data in semi-colon delimited text files. Figure 9 shows a short

excerpt from a sample Detail Trace that shows that the SQLDisconnect call failed

because the connection had a pending transaction:

|

Figure 9: This sample Detail Trace shows that the

SQLDisconnect call failed.

Detail Trace contains the following

columns:

- Date

- Time or Tick Count (depending on configuration)

- Component

- Process ID

- Thread ID

- Trace ID

- Trace Data

The History Log contains these columns:

- Date

- Time or Tick Count (depending on configuration)

- Component

- Process ID

- Thread ID

- History Data

If your trace files exceed the maximum size, they will wrap and begin writing at the beginning over old data. When this happens the last entry in the file will be above a line that merely reads " ".

Sometimes, IBM service may request that you also collect the SQL.log. This

utility is provided by the unixODBC driver manager and can be either enabled

through ODBCConfig or manually configured in the odbc.ini. Here's an example of

the appropriate entry in odbc.ini:

Trace = Yes

Trace File = /tmp/sql.log

Additional Information

ITSO offers two Redbooks that can be helpful to

those who want to learn more about OS/400 Linux integration:

- Linux Integration with OS/400 on the IBM--iSeries Server (SG24-6551)

- Linux on the IBM--iSeries Server: An Implementation Guide (SG24-6232)

Jarek Miszczyk is the

Senior Software Engineer, PartnerWorld for Developers, IBM Rochester. He can be

reached by email at

More than ever, there is a demand for IT to deliver innovation. Your IBM i has been an essential part of your business operations for years. However, your organization may struggle to maintain the current system and implement new projects. The thousands of customers we've worked with and surveyed state that expectations regarding the digital footprint and vision of the company are not aligned with the current IT environment.

More than ever, there is a demand for IT to deliver innovation. Your IBM i has been an essential part of your business operations for years. However, your organization may struggle to maintain the current system and implement new projects. The thousands of customers we've worked with and surveyed state that expectations regarding the digital footprint and vision of the company are not aligned with the current IT environment. TRY the one package that solves all your document design and printing challenges on all your platforms. Produce bar code labels, electronic forms, ad hoc reports, and RFID tags – without programming! MarkMagic is the only document design and print solution that combines report writing, WYSIWYG label and forms design, and conditional printing in one integrated product. Make sure your data survives when catastrophe hits. Request your trial now! Request Now.

TRY the one package that solves all your document design and printing challenges on all your platforms. Produce bar code labels, electronic forms, ad hoc reports, and RFID tags – without programming! MarkMagic is the only document design and print solution that combines report writing, WYSIWYG label and forms design, and conditional printing in one integrated product. Make sure your data survives when catastrophe hits. Request your trial now! Request Now. Forms of ransomware has been around for over 30 years, and with more and more organizations suffering attacks each year, it continues to endure. What has made ransomware such a durable threat and what is the best way to combat it? In order to prevent ransomware, organizations must first understand how it works.

Forms of ransomware has been around for over 30 years, and with more and more organizations suffering attacks each year, it continues to endure. What has made ransomware such a durable threat and what is the best way to combat it? In order to prevent ransomware, organizations must first understand how it works. Disaster protection is vital to every business. Yet, it often consists of patched together procedures that are prone to error. From automatic backups to data encryption to media management, Robot automates the routine (yet often complex) tasks of iSeries backup and recovery, saving you time and money and making the process safer and more reliable. Automate your backups with the Robot Backup and Recovery Solution. Key features include:

Disaster protection is vital to every business. Yet, it often consists of patched together procedures that are prone to error. From automatic backups to data encryption to media management, Robot automates the routine (yet often complex) tasks of iSeries backup and recovery, saving you time and money and making the process safer and more reliable. Automate your backups with the Robot Backup and Recovery Solution. Key features include: Business users want new applications now. Market and regulatory pressures require faster application updates and delivery into production. Your IBM i developers may be approaching retirement, and you see no sure way to fill their positions with experienced developers. In addition, you may be caught between maintaining your existing applications and the uncertainty of moving to something new.

Business users want new applications now. Market and regulatory pressures require faster application updates and delivery into production. Your IBM i developers may be approaching retirement, and you see no sure way to fill their positions with experienced developers. In addition, you may be caught between maintaining your existing applications and the uncertainty of moving to something new. IT managers hoping to find new IBM i talent are discovering that the pool of experienced RPG programmers and operators or administrators with intimate knowledge of the operating system and the applications that run on it is small. This begs the question: How will you manage the platform that supports such a big part of your business? This guide offers strategies and software suggestions to help you plan IT staffing and resources and smooth the transition after your AS/400 talent retires. Read on to learn:

IT managers hoping to find new IBM i talent are discovering that the pool of experienced RPG programmers and operators or administrators with intimate knowledge of the operating system and the applications that run on it is small. This begs the question: How will you manage the platform that supports such a big part of your business? This guide offers strategies and software suggestions to help you plan IT staffing and resources and smooth the transition after your AS/400 talent retires. Read on to learn:

LATEST COMMENTS

MC Press Online