If you were to pick the topics in your IT career that rate the highest in stress production and heartburn generation, what would they be? Judging by the questions I receive, my guess is that system security would be near the top of your list. And given the amount of press that security issues generate nowadays, it's no wonder. It's enough to make you consider a career in basket weaving!

Fortunately, things aren't as dismal as the press would lead you to believe. If you're going to deploy a network-connected Linux box, then you will be running a fairly secure machine. According to the Honeynet Project, the mean survival time (before the machine is compromised) for an unpatched Linux system was three months as of the end of 2004 (up from 72 hours in 2001 to 2002). The trend is toward an even more robust out-of-the-box installation. Thus, it's not likely that your machine will get cracked the moment you plug it in and before you apply updates.

This month, I'll share some things I've learned about hardening a Linux box. This article is not meant to be a comprehensive treatise on the subject; instead, it is a summary of tips I have picked up that will help you successfully fend off attacks from the evil-doers of the digital world. While Linux-centric, many of the tips are applicable to other operating systems as well.

Partitioning

The time to start the process of hardening your machine is prior to the OS installation. A little planning can go a long way toward a secure and easily administered installation.

Let's start by looking at the most basic step in any OS installation: partitioning the hard drive(s). Users familiar with Microsoft Windows systems are accustomed to either a single "C" partition that engulfs the entire drive or a minimal "C" drive for the OS plus a "D" partition that engulfs the remainder of the drive. One of these two options seems to be the standard configuration of a factory Windows installation. Unfortunately, many of the installers used by the Linux distributions are starting to follow this poor example. For instance, the default configuration for Disk Druid (the partitioning tool used by Red Hat Linux and its derivatives) has three partitions: a boot partition (strategically placed first so as to work with old system BIOSes), a swap partition, and a system root partition that holds everything. While this scheme does have the advantage of expediency, its many disadvantages make it a horrible choice. In my years of using OS/2, I always created at least three partitions: one for the OS, one for the applications, and one for the user data. Now that I'm using Linux, I've extended that list to include a partition for temporary files, one for "served" data (e.g., by HTTPD, SMB, or FTP), one for logs, and a few others.

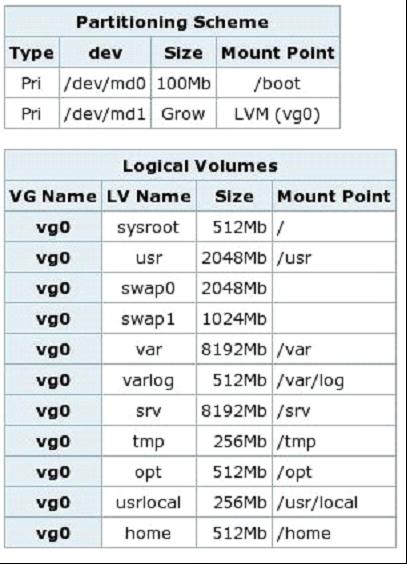

Figure 1 shows the partitioning scheme for one of my typical Web servers.

Figure 1: This example shows just how far I go to separate partitions on a disk based upon their usage.

As you can see, I have carved up my drive rather finely (some would say too finely). The reasons for my choice in this regard all boil down to one thing: control. With the disk divided like this, I can do the following:

- Enforce disk quotas: Linux quotas are enforced per file system, so each partition can carry its own limits. If I don't split up the partitions, then I'll have to ensure that the quota for each daemon user is set appropriately on one shared file system. What a pain that can become!

- Mount individual partitions with special properties, such as read-only or noexec: The benefit to mounting a partition read-only is obvious. If the information on the drive normally isn't going to change, then why give someone the opportunity to change it? Of more interest is noexec. When a partition is mounted with the noexec option, the kernel refuses to directly execute any binaries stored on that partition. I commonly mount /tmp with the noexec option, since that directory is writable by all users for temporary use. One of the common attack vectors for dynamic Web sites (such as those written in PHP) is a carefully crafted Uniform Resource Identifier (URI) that causes the interpreter or Web server to write a binary to the /tmp directory, from where it is then launched under the HTTPD daemon's user ID. The noexec mount option effectively closes that vector.

- Format the partitions with an appropriate file system: With Windows, you have few choices of file system. You're typically limited to NTFS, VFAT, or one of their close relatives. With Linux, you have many choices, each with advantages in certain areas. For example, if I decide that a partition should use ReiserFS (which seems to run rings around everything else when it comes to huge numbers of small files), I can easily create one.

- Prevent runaway processes, logs, or malware from filling the system partition and thus bringing down the system: Let's face it, almost any operating system can be brought down simply by filling its disk drives. Separate partitions ensure that logs can grow to fill just one partition and that users can fill only so much space (via quotas).
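The mount-option point in the list above lends itself to a quick check. What follows is a minimal Python sketch, not a definitive tool: it parses text in /etc/fstab format and reports which mount points lack a given option such as noexec. The device names and the sample table are hypothetical, made up purely for illustration.

```python
def mounts_missing_option(fstab_text, mount_points, option="noexec"):
    """Return the requested mount points whose fstab entry lacks `option`.

    A mount point with no fstab entry at all is also reported, since it
    is not a separate partition and cannot carry its own options.
    """
    options_by_mount = {}
    for line in fstab_text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        fields = line.split()
        if len(fields) < 4:
            continue  # skip malformed entries
        # fstab fields: device, mount point, fs type, options, dump, pass
        options_by_mount[fields[1]] = fields[3].split(",")

    missing = []
    for mount_point in mount_points:
        options = options_by_mount.get(mount_point)
        if options is None or option not in options:
            missing.append(mount_point)
    return missing


# Hypothetical fstab contents; the volume names are assumptions.
SAMPLE_FSTAB = """\
# device        mount point  type  options                 dump pass
/dev/vg0/root   /            ext3  defaults                1 1
/dev/vg0/tmp    /tmp         ext3  defaults,noexec,nosuid  1 2
/dev/vg0/www    /var/www     ext3  defaults,ro             1 2
/dev/vg0/log    /var/log     ext3  defaults                1 2
"""

print(mounts_missing_option(SAMPLE_FSTAB, ["/tmp", "/var/log"]))
```

On a real machine, you would pass in the contents of /etc/fstab instead of the sample text.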

Choosing the initial size for each partition can be somewhat tricky and can vary quite a bit depending on what the server will be used for. The values shown in Figure 1 are a good starting point for my basic Web servers, which typically have at least a 32 GiB hard drive installed. If you total up the sizes of all of the partitions, you'll note that there is at least 10 GiB of free space on this drive. I use logical volume management (LVM) on my systems, so if I find a partition starting to become starved for space, I can easily add more on the fly. Thus, the biggest objection to my partitioning strategy ("How do I know how big to make each partition?") is rendered moot.
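Spotting a partition that is "starting to become starved for space" can be automated. Here is a rough Python sketch that uses the standard library's shutil.disk_usage to flag mount points above a usage threshold. The 85% threshold and the list of mount points are assumptions for illustration, not recommendations.

```python
import shutil


def starved_mounts(mount_points, threshold=0.85, usage=shutil.disk_usage):
    """Return (mount_point, fraction_used) pairs at or above `threshold`.

    `usage` is injectable so the logic can be exercised without touching
    a real disk; by default it is shutil.disk_usage.
    """
    starved = []
    for mount_point in mount_points:
        stats = usage(mount_point)
        fraction = stats.used / stats.total if stats.total else 0.0
        if fraction >= threshold:
            starved.append((mount_point, round(fraction, 2)))
    return starved


if __name__ == "__main__":
    # "/" exists everywhere; add your own mount points as needed.
    print(starved_mounts(["/"]))
```

Any partition that this reports is a candidate for growing its logical volume from the free space left in the volume group.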

Install Only What You Need

One of the most fundamental tips I can offer to secure your system is to install only those packages that you need to fulfill your system's mission. While it may be tempting to click the "Install everything" button, you really have to plan a bit to ensure that you aren't helping any cracker who might somehow gain access to your system. Does your Web server really need the GCC compiler suite, for example?

While this planning phase used to be time-consuming, modern installers usually have good "purpose" selections in them. As an example, I'll turn once again to the Red Hat Enterprise Linux installer. At one point during the installation, you'll be asked to select from Server, Workstation, Custom, or Minimal configurations. Assuming that you choose something other than Custom (which will require additional input from you), the most commonly used packages for a given purpose will be chosen for installation.

The nice thing about the commercial Linux distributions is that you can relatively easily add or remove packages once your system is running. So don't get too strung out trying to get everything installed immediately. If you're a minimalist, then choose a "minimal" installation; you easily can add the missing software later. If you want someone to do more of the work for you, then choose a server or workstation installation (whichever is more appropriate). Once the installation is completed, you'll usually find everything that you need for that purpose is installed, albeit disabled until you overtly enable it. Should you determine that some of the installed packages are superfluous, then the package managers make it easy enough to remove them.
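To answer the "does your Web server really need GCC?" question for an existing box, you can check which build tools are on the search path. This is a minimal Python sketch using shutil.which; the watch list of tool names is an assumption, so substitute whatever you consider superfluous for your server's mission.

```python
import shutil

# Hypothetical watch list of tools a production Web server may not need.
BUILD_TOOLS = ["gcc", "cc", "make", "gdb"]


def tools_present(names, which=shutil.which):
    """Map each tool found on the PATH to its location.

    `which` is injectable for testing; by default it is shutil.which,
    which returns None when a command is not found.
    """
    found = {}
    for name in names:
        path = which(name)
        if path is not None:
            found[name] = path
    return found


if __name__ == "__main__":
    for name, path in tools_present(BUILD_TOOLS).items():
        print(f"{name} is installed at {path}")
```

Anything this turns up can then be removed with your distribution's package manager if it isn't needed.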

Keep Updated

Keep your software updated. Period. This tip seems obvious, but it bears repeating, many times. The beauty of open-source software is that the updates come quickly and usually before there are known exploits. Most of the top Linux distributions provide the means to easily update the installed software. Avail yourself of it. Subscribe to the errata and security mailing lists for your distribution, and keep on top of applicable fixes for your system. Then apply them!

Microsoft takes a considerable amount of heat for security problems relating to its software (and rightfully so). But it shouldn't have to take heat for the vulnerabilities it has addressed that continue to be exploited in unpatched systems. The same applies to open-source software. Exercise due diligence and keep your systems up-to-date.

Security Through Obscurity

Security through obscurity—it's the least secure means of securing anything and the means most commonly used by parents around Christmas time. How many of you can honestly say that you went through your entire childhood oblivious to what Santa had hidden away for you? Security through obscurity just isn't very effective, yet it does serve some purpose.

I administer my remote sites using OpenSSH to connect to them from my office. The well-known port for SSH connections is port 22, and that's where all of the script kiddies of the world look when they want to perform a brute-force dictionary attack. So I change the port in each machine's SSH daemon configuration file and then specify that port whenever I connect. A determined cracker won't be fooled by this ploy for very long, but given that the majority of the black hats nowadays are simply cracker-wannabes (using software written by someone else), they'll be stopped before they even get started. At the very least, the log files won't be filled with the voluminous "invalid login attempt" messages that these attacks generate.

If you can use an alternate port for one of your connections, then consider doing it. Changing your SMTP server's port may not be the best choice, but you may find others (like the aforementioned SSH port) where you could do it. Why not take advantage of this low-tech and inexpensive security enhancement? I'll return to SSH in the section on local access.
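As a sanity check on the SSH port change, the following Python sketch reads text in sshd_config format and reports which ports the daemon is told to listen on. It is a simplified parser that assumes whitespace-separated directives and ignores included files; port 2222 in the sample is a made-up value, not a recommendation.

```python
def sshd_ports(config_text):
    """Return the ports named by Port directives, or [22] if there are none.

    sshd_config keywords are case-insensitive, and Port may appear more
    than once to make the daemon listen on several ports.
    """
    ports = []
    for line in config_text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # commented-out directives have no effect
        parts = line.split()
        if parts[0].lower() == "port" and len(parts) >= 2 and parts[1].isdigit():
            ports.append(int(parts[1]))
    return ports or [22]  # 22 is the default when Port is not set


# Hypothetical configuration fragment.
SAMPLE_SSHD_CONFIG = """\
#Port 22
Port 2222
Protocol 2
"""

print(sshd_ports(SAMPLE_SSHD_CONFIG))
```

With the daemon moved, the client has to name the port explicitly, for example with the ssh command's -p option.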

Use the Firewall

The security buzzword that everyone knows and many utter in hushed tones is "firewall"—purportedly the all-powerful gatekeeper that protects machines from malicious intruders. We all know what firewalls are and we all know we should use them, so that's all that needs to be said, right? Well, no.

I assert that firewalls, while powerful tools, are only minor players in the security game when configured as most people configure them: "Be strict on incoming connection requests, but loosen up on outbound connections." In other words, the firewall is only going to allow inbound connections to the ports that we specify, such as ports 80 and 443 (HTTP/HTTPS) or port 25 (SMTP), but any program running on our machine can connect, unfettered, to anywhere on the outside. Frankly, a properly configured bastion host will provide exactly the same level of protection as a firewall so configured.

Be assured that I am not dismissing the use of a firewall. Quite the contrary. Even this simple firewall configuration (which, by the way, the Red Hat installer will help you create during installation) will keep you from shooting yourself in the foot. If you inadvertently configure a daemon that accepts connections from the external interface, then the quick-and-dirty firewall will at least give you a modicum of protection.

I just want to remind you that a firewall is not a panacea for your security concerns. You should be sure that your system is properly configured so as to be reasonably secure even without the firewall in place. Proper configuration of a firewall for outbound connections is a subject unto itself and is beyond the scope of this article.
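One way to judge how the box would fare without the firewall is to list which daemons are listening on something other than the loopback interface. The Python sketch below parses text in the format of Linux's /proc/net/tcp. It assumes IPv4 only and a little-endian machine (such as x86), where the kernel prints the address bytes in reverse order; the sample table is fabricated for illustration.

```python
import socket
import struct

LISTEN = "0A"  # state code for a listening TCP socket in /proc/net/tcp


def listening_sockets(proc_net_tcp_text):
    """Return (address, port) pairs for sockets in the LISTEN state."""
    sockets = []
    for line in proc_net_tcp_text.splitlines()[1:]:  # first line is a header
        fields = line.split()
        if len(fields) < 4 or fields[3] != LISTEN:
            continue
        hex_addr, hex_port = fields[1].split(":")
        # Assumes little-endian byte order for the hex-encoded address.
        addr = socket.inet_ntoa(struct.pack("<I", int(hex_addr, 16)))
        sockets.append((addr, int(hex_port, 16)))
    return sockets


def externally_reachable(sockets):
    """Keep only listeners that are not bound to the loopback network."""
    return [(addr, port) for addr, port in sockets if not addr.startswith("127.")]


# Fabricated sample: SMTP and a database on loopback, HTTP on all interfaces,
# plus one established connection that should be ignored.
SAMPLE_PROC_NET_TCP = """\
  sl  local_address rem_address   st tx_queue rx_queue tr tm->when retrnsmt   uid  timeout inode
   0: 0100007F:0019 00000000:0000 0A 00000000:00000000 00:00000000 00000000     0        0 1001
   1: 00000000:0050 00000000:0000 0A 00000000:00000000 00:00000000 00000000     0        0 1002
   2: 0100007F:0CEA 00000000:0000 0A 00000000:00000000 00:00000000 00000000    27        0 1003
   3: 0A00000A:0050 0B00000A:C350 01 00000000:00000000 00:00000000 00000000    48        0 1004
"""

print(externally_reachable(listening_sockets(SAMPLE_PROC_NET_TCP)))
```

Every port in that output should be one you intended to expose; anything else is a daemon to reconfigure or remove.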

Limit Local Access

When arguments flare up over the relative security of Windows versus Linux, you'll often see the number of patches issued over a period of time used as a metric for comparison. I won't offer my opinion on this matter except to point out that the lion's share of patches for Linux are for issues related to users with local access, not access through service interfaces. The cracker's ultimate goal is to obtain shell (command line) access to your server. Thus, your ultimate goal should be to prevent it.

If you have users other than yourself who have access to your server, then things start to get hairy. Configuring chroot jails for services like FTP is straightforward, and a ton of documentation has been generated to help you in this task. I encourage a good Google search if you're interested. Shell access is a much bigger challenge to lock down. Granted, the users to whom you provide this level of access should be trustworthy. And the things that they should be able to access from a command line are no different than in traditional multi-user UNIX environments. You should not be too concerned if a trusted user is logged into your server, provided you have properly secured the various files and directories.

What's the easiest way to provide secure shell access to your trusted users? Turn off Telnet (which is the default nowadays) and direct your users to access the machine using SSH. As I mentioned earlier, keep the script kiddies at bay by moving the SSH port from 22 to something above 1024. And for the ultimate protection, turn off SSH password authentication and rely on public key authentication instead. By doing this, you won't give anyone the chance to use a dictionary attack against your server. You do have some minimal work involved with a server configured this way (specifically, each new user needs to provide you with her public key so that you may place it on the server prior to her first attempt to log on), but the rewards are well worth the effort. A short primer on this setup can be found here.
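To confirm that a server really is set up for key-only logins, you can inspect its SSH daemon configuration. This Python sketch is a simplified check, assuming whitespace-separated directives and stopping at the first Match block; it relies on OpenSSH using the first value it finds for a keyword and defaulting both settings to "yes". Other mechanisms, such as keyboard-interactive authentication, may also need attention and are not covered here.

```python
# OpenSSH defaults for the two settings examined below.
DEFAULTS = {"passwordauthentication": "yes", "pubkeyauthentication": "yes"}


def effective_settings(config_text):
    """Return global sshd_config settings as a dict keyed by lowercase keyword."""
    settings = {}
    for line in config_text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        parts = line.split(None, 1)
        if len(parts) != 2:
            continue
        keyword = parts[0].lower()
        if keyword == "match":
            break  # conditional blocks are beyond this sketch
        # The first occurrence of a keyword wins, so never overwrite.
        settings.setdefault(keyword, parts[1].strip())
    return settings


def key_only_logins(config_text):
    """True if password logins are off and public key logins are on."""
    settings = {**DEFAULTS, **effective_settings(config_text)}
    return (settings["passwordauthentication"].lower() == "no"
            and settings["pubkeyauthentication"].lower() == "yes")


print(key_only_logins("PasswordAuthentication no\nPubkeyAuthentication yes\n"))
```

Note that a commented-out "PasswordAuthentication no" line does nothing; the default of "yes" still applies, which is exactly the situation this check is meant to catch.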

I wouldn't consider this discussion complete unless I reminded you to pick strong user names and passwords. Create temporary and demonstration accounts with definite expiration dates, lest you fall into the same quagmire I did.

The only time a system of mine was compromised was on an older Red Hat 7.3 machine. I created a user profile for demonstration purposes and then failed to remove it when I was done. Since the box in question wasn't normally exposed to the Internet, I was lax in this important detail...and I got caught. Once I placed that machine on the 'net, it didn't take long for someone to find the OpenSSH daemon listening on port 22, allowing password authentication. A short dictionary attack was all it took to find the weak user name and password. Fortunately, I was using the machine at the time of the attack and noticed the sudden CPU spike, so I got to actually watch the attack in progress. I allowed it to go on until I felt that the black hat was getting ready to cover his tracks. I pulled the plug on the Ethernet connection, saved the logs and other files his work had produced for future study, and then wiped the machine and reloaded it with CentOS.

I hope that a word to the wise is sufficient....
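Forgotten demonstration accounts like mine can be caught by looking for accounts with no expiration date. The Python sketch below parses text in /etc/shadow format, where the eighth colon-separated field holds the expiry date as a count of days since January 1, 1970 (empty means the account never expires). The account names and field values are invented for illustration, and reading the real file requires root privileges.

```python
from datetime import date, timedelta

EPOCH = date(1970, 1, 1)


def account_expiry(shadow_text):
    """Map each account name to its expiry date, or None if it never expires."""
    expiry = {}
    for line in shadow_text.splitlines():
        fields = line.strip().split(":")
        if len(fields) < 8 or not fields[0]:
            continue  # skip blank or malformed lines
        days = fields[7]
        expiry[fields[0]] = EPOCH + timedelta(days=int(days)) if days else None
    return expiry


def accounts_without_expiry(shadow_text, names):
    """Return those of `names` that exist and have no expiry date set."""
    expiry = account_expiry(shadow_text)
    return [name for name in names if name in expiry and expiry[name] is None]


# Invented entries: "demo" never expires, "trial" expires on day 13149.
SAMPLE_SHADOW = """\
demo:!:13100:0:99999:7:::
trial:!:13100:0:99999:7::13149:
"""

print(accounts_without_expiry(SAMPLE_SHADOW, ["demo", "trial"]))
```

An account that shows up here can be given an expiry date with a tool such as chage and its -E option.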

The Future of Linux Security

What does the future hold? The latest tool for securing Linux comes from the National Security Agency, and it's called Security-Enhanced Linux (SELinux). SELinux adds mandatory access controls to the kernel, enabling much finer-grained control over who can access what. A server configured with SELinux can be made virtually crack-proof (I'm not exaggerating).

In the current discretionary access control (DAC) model, access is granted to a user based on either who he is or what groups he belongs to. Once a cracker gains access to the system (through a shell or a buffer overflow), the software he subsequently runs has the rights of the compromised user. SELinux introduces the concept of mandatory access control (MAC) to Linux, which adds a third element to the security mix: context (in other words, what the user should be doing). For example, if you create a user under which the HTTPD daemon will run, you can limit it to only those things that a Web server daemon should have access to. Should the HTTPD daemon get cracked, the attacker can do only those things that a normal Web server daemon would be expected to do. Anything else will be disallowed. With SELinux, even the omnipotent root user can be contained. There's much more to SELinux than this, and I encourage you to check it out for yourself. I find it exciting, since it nips in the bud the implications of a yet-to-be-discovered buffer overflow or other exploit.
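The difference between the two models can be shown with a toy example. The Python sketch below is only a conceptual model and has nothing to do with how SELinux is actually implemented; the domain and type names are hypothetical and are not taken from any real policy. It shows a cracked Web server process running as root: the DAC check alone would let it read anything, while the added MAC check confines it to what the policy explicitly allows.

```python
def dac_allows(user, groups, owner, group, mode, want):
    """Discretionary check on owner/group/other bits; root bypasses them."""
    if user == "root":
        return True
    bit = {"r": 4, "w": 2, "x": 1}[want]
    if user == owner:
        shift = 6  # owner permission bits
    elif group in groups:
        shift = 3  # group permission bits
    else:
        shift = 0  # "other" permission bits
    return bool((mode >> shift) & bit)


# Hypothetical mandatory policy: anything not listed is denied.
POLICY = {
    ("webserver_t", "web_content_t"): {"r"},
    ("webserver_t", "web_log_t"): {"w"},
}


def mac_allows(domain, file_type, want):
    """Mandatory check: the process's domain must be granted the access."""
    return want in POLICY.get((domain, file_type), set())


def access_allowed(process, target, want):
    """Access requires both the discretionary and the mandatory check to pass."""
    return (dac_allows(process["user"], process["groups"],
                       target["owner"], target["group"], target["mode"], want)
            and mac_allows(process["domain"], target["type"], want))


cracked_httpd = {"user": "root", "groups": ["root"], "domain": "webserver_t"}
web_page = {"owner": "root", "group": "root", "mode": 0o644, "type": "web_content_t"}
shadow_file = {"owner": "root", "group": "root", "mode": 0o600, "type": "shadow_file_t"}

print(access_allowed(cracked_httpd, web_page, "r"))     # True
print(access_allowed(cracked_httpd, shadow_file, "r"))  # False
```

The second result is the point: even though the process is root, the policy says a Web server has no business reading the password file.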

It's Not All That Bad

As you can see, there's really nothing onerous about building a secure Linux box. The distributions are getting better all the time at delivering a solid configuration right out of the box. All you need to do is a bit of planning before you start.

In this article, I have delineated some tips that I have found useful with my Linux boxes. I'm sure there are many more that I haven't discussed. There are plenty of "Linux hardening" books on the market, and I encourage you to pick up one and give it a read. If I've missed your favorite tip, I welcome your emails!

That's it for me this year. Enjoy the upcoming holidays, and I'll see you in 2006.

Barry L. Kline is a consultant and has been developing software on various DEC and IBM midrange platforms for over 23 years. Barry discovered Linux back in the days when it was necessary to download diskette images and source code from the Internet. Since then, he has installed Linux on hundreds of machines, where it functions as servers and workstations in iSeries and Windows networks. He co-authored the book Understanding Linux Web Hosting with Don Denoncourt. Barry can be reached at


MC Press Online