The connection string is the bridge between the SQL world and the QSYS world of the IBM i.

I have recently been spending a lot of time working with a couple of concepts: data warehouses (and other external database access) and disaster recovery. These two concepts are fundamentally related, and like so many things in the IBM i world, some well-planned architecture decisions up front will make the back end very easy. In this case, we'll be talking about the connection string used by external processes to talk to your enterprise data on the IBM i.

Some Assumptions

I've already begun making some assumptions in the opening paragraph, so I might as well make the entire list:

- All enterprise data resides on the IBM i.

- Data in external sources (such as data warehouses) is staged from the IBM i.

- Business logic resides on the IBM i, not in the data warehouse.

From a process standpoint, this means that the data warehouse is responsible only for manipulation and presentation of data provided by the i. I call that the "slicing and dicing" in which users can see the enterprise data in various grouping and aggregations. This doesn't mean there are no calculations in the data warehouse. On the contrary, I'm all for providing transactional data (such as sales) to external tools that can do the computational heavy lifting of forecasts and then provide all the nice graphical dashboards that management needs to make decisions.

But note that the enterprise data—the day-to-day underlying bones of the business, from purchasing to production to payments—resides on the IBM i. We enter it there, we store it there, and we make it available to whoever needs it. We may be able to simply lift data points, or we may need to do some complex data-driven logic, but at the end of the day, the data comes from the enterprise, and the data warehouse packages and presents it.

The Bright Red Line

So where is the line that separates calculations on the server from calculations in the data warehouse? As always in architectural decisions, there may be a bit of wiggle room, but I do have a pretty firm guideline: if a calculation conditionally accesses different tables under different use cases, then it definitely belongs on the server (and in my world, it should be written in RPG, not SQL).

Let's consider an example. My environment uses two different costing models: standard cost and average cost. The type of cost used in a given situation depends on a number of factors. Add to that the fact that some cases may need the current cost, while others may require a point-in-time cost, where you specify a date and the system tells you the cost of that item on that date. Given that complexity, I wrote an RPG program to determine the cost, and for external data consumers I wrapped it in a user-defined function (UDF).

And that's what we're going to be talking about today. How to access stored procedures and UDFs using an IBM i-aware connection.

Libraries Are Like Schemas, Except When They're Not

Creating your first stored procedure and UDF isn't really all that difficult; awhile back I explained the process in two articles. But like so many things in programming, it's not the initial proof of concept that requires the architectural underpinnings; it's the nuances of using those concepts in a live environment.

My environment separates the object library and the data library. This isn't a problem on the IBM i; that’s what library lists are for! But it does present the first challenge for external access, since standard SQL usually thinks about only a single schema (a schema is roughly equivalent to a library in QSYS terms). I could get around this at first by specifying the data library as the primary schema and then qualifying the function. I might say something like this:

SELECT IMITEM, IMDESC, PRODOBJ.ITM_COST(IMITEM) FROM ITMMST

The problem is that the ITM_COST function calls a stored procedure, ITM_COSTGET. That procedure is also in PRODOBJ, and that means it won't be found unless I qualify the library in the function (I literally tell the function ITM_COST to call ITM_COSTGET in PRODOBJ). So now I've got the library name PRODOBJ hard-coded in a couple of places. This is the stuff that architectural nightmares are made of. The first thing that goes wrong is when you need to test changes. In the IBM i world, we tend to test by putting the test library on the top of our library list. We just add TESTLIB on top and any programs in that library take precedence over the versions in PRODOBJ.

Unfortunately, our UDF call is qualified, and it calls a qualified program, so there's literally no way to make it use the test program. Instead, we'll need a different version of the UDF and a different call to that UDF. That's not entirely horrible at first, but then you have to promote the function. Well, you actually have to create a new object and change the qualified name of the called procedure. The real danger here is that you're essentially required to do double maintenance, keeping two completely separate objects in sync (but with slight differences!). The first time you forget to update the production version—or worse, put the test version into production—you'll understand just how painful that can be.

The problems only multiply when you’re talking about databases, especially when the files exist in multiple libraries. For example, our primary test box has three environments, each with the same database file names but in different libraries, and there are multiple libraries per environment. For the green-screen, this is really easy: just set your library list according to the environment you wish to use. But with SQL's single-schema concept, there's no way around it. In fact, it's really quite difficult. Every SELECT statement has to qualify all of the tables, except those in one library. In order to use a different set of libraries, you have to change all of those qualified names.

It's disaster waiting to happen.

I Wish There Were a Schema List!

So what's the solution? Is there a way to make an SQL connection library list–aware? As it turns out, there is, but it isn't entirely intuitive. One part is pretty straightforward, and the other is much less obvious. Also, the setup is slightly different between the two primary connection types, ODBC and JDBC. I'll walk you through the JDBC connection here, using one of my favorite tools, DBeaver.

Figure 1: Start by creating a DB2 for iSeries connection.

DBeaver is really helpful in that it understands what an iSeries is and is able to create most of the defaults for you. Okay, it still thinks we work on iSeries (and the “AS 400,” whatever that is), but still, that's better than not knowing what the system is at all.

Figure 2: In the Database/Schema prompt, enter *LIBL followed by your library list separated by commas.

Specifying the library list is the straightforward part. The only weirdness is having to enter *LIBL to tell the system that it's a library list, but it works as shown. Now any stored procedures in PRODOBJ and any tables in PRODDTA1 or PRODDTA2 can all be accessed without having to qualify the library name. To use different libraries, set up a different connection with the required libraries.

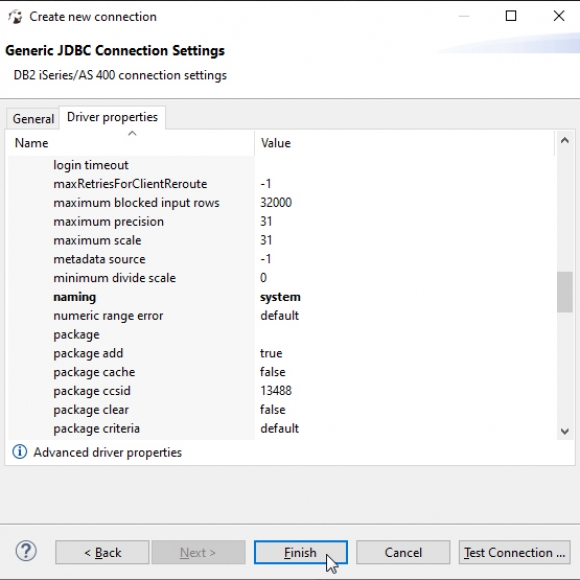

Figure 3: Change the naming property from sql to system.

This is the nonintuitive part. In the Driver properties tab, you'll see the naming property. It has two values, sql and system, and the default is sql. You don't want the default; you want the other value, system. So select that value.

Figure 4: That's all that's required. Now you can hit Finish.

That's it. This connection will now honor the library list you specified in Figure 1. And the beautiful part of this is that now you can run the same statement in a completely different environment just by creating a new connection with the required library list and connecting to it instead.

Additional Nuances

There are some additional nuances that I'll cover in subsequent articles. In particular, creating objects (tables, views, stored procedures, and functions) requires a little more work. But this is all you need to start creating a robust multi-platform environment where SQL and the IBM i work together seamlessly.

Business users want new applications now. Market and regulatory pressures require faster application updates and delivery into production. Your IBM i developers may be approaching retirement, and you see no sure way to fill their positions with experienced developers. In addition, you may be caught between maintaining your existing applications and the uncertainty of moving to something new.

Business users want new applications now. Market and regulatory pressures require faster application updates and delivery into production. Your IBM i developers may be approaching retirement, and you see no sure way to fill their positions with experienced developers. In addition, you may be caught between maintaining your existing applications and the uncertainty of moving to something new. IT managers hoping to find new IBM i talent are discovering that the pool of experienced RPG programmers and operators or administrators with intimate knowledge of the operating system and the applications that run on it is small. This begs the question: How will you manage the platform that supports such a big part of your business? This guide offers strategies and software suggestions to help you plan IT staffing and resources and smooth the transition after your AS/400 talent retires. Read on to learn:

IT managers hoping to find new IBM i talent are discovering that the pool of experienced RPG programmers and operators or administrators with intimate knowledge of the operating system and the applications that run on it is small. This begs the question: How will you manage the platform that supports such a big part of your business? This guide offers strategies and software suggestions to help you plan IT staffing and resources and smooth the transition after your AS/400 talent retires. Read on to learn:

LATEST COMMENTS

MC Press Online