With the right lakehouse implementation, all your data can be accessed, managed, and governed to deliver deeper, more complete insights, resulting in smarter business outcomes. But not all lakehouses are born equal.

Editor’s Note: This article is an excerpt from the book The Lakehouse Effect: A New Era for Data Insights and AI.

Data is at the center of every business. It keeps applications running, powers predictive insights, and enables better experiences for customers and employees. However, the full benefit of data is elusive because of the way data is stored and accessed for analytics and Artificial Intelligence (AI).

Enterprises that rely on monolithic repositories with multiple data warehouses and data lakes located on premises and in the cloud are far from alone. Many organizations are inhibited by data silos, and history suggests that the amount of stored data will continue to grow at an accelerated rate.

The data lake concept was supposed to fix all these issues; just land company data in a centralized place and process it. But it’s not so easy to update the lakes, properly catalog data, or ensure good governance—and the skillsets required for these tasks are specific, rare, and expensive. As a result, data lakes have proven more costly to build and maintain than originally perceived. A data warehouse does offer high performance for processing terabytes of structured data, but warehouses can become expensive, too, especially for new and evolving workloads. Most organizations run analytics and AI workloads in ecosystems that are complex and cost-inefficient. It’s time for a change.

For AI to be adopted by the masses, it needs to be accessible and as easy to use as turning on a light switch. Users must be able to rely on it. Trust it. Let it do its thing. To do that, though, AI needs to be able to access and consume its life source (all data) simply so that it can apply its models intelligently and transparently, enabling businesses to discover and act on insights to produce smarter business outcomes.

The Lakehouse

A lakehouse combines the best capabilities and features of data lakes and data warehouses. A lakehouse has the potential to be a one-stop shop for an enterprise to store and access all of its data. A lakehouse that is built on an architecture that uses open standards, open-source components, and execution engines that are optimized for different workloads can enable AI systems to access everything they need. This could potentially bring those AI systems close to being sentient within the enterprise. For these reasons, the lakehouse might be one of the most important data management advances since the birth of the Relational Database Management System (RDBMS) in 1983.

Of course, governance (whether general governance, data governance, or AI governance) will remain central to the success of implementing a lakehouse. AI, data, and governance are symbiotic, like the three legs of a tripod. If one leg fails, the other two fall over and the whole thing comes tumbling down.

Every workload is unique and should be optimized with the best-suited environment to keep cost at a minimum and performance at a maximum. Organizations need a lakehouse that delivers an optimal level of performance for better decision-making, along with the ability to unlock more value from all types of data, resulting in deeper insights.

IBM watsonx

IBM watsonx is an AI and data platform designed to enable enterprises to scale and accelerate the impact of the most advanced AI with trusted data. Organizations turning to AI today need access to a full technology stack that enables them to train, tune, and deploy AI models, including foundation models (explained below) and machine-learning (ML) capabilities, across their organization with trusted data, speed, and governance, all in one place and designed to run across any cloud environment.

Unlike traditional machine learning, where each new use case requires a new model to be designed and built using specific data, foundation models are trained on large amounts of unlabeled data (data that does not have its characteristics, properties, or classifications tagged with it), which can then be adapted to new scenarios and business applications. A foundation model can therefore make massive AI scalability possible while amortizing the initial work of model building each time it is used because the data requirements for fine-tuning additional models are much lower. This can result in both increased ROI and much faster time to market.

Foundation models can be the basis for many applications of the AI model. Using self-supervised learning (in which the model generates its own training signal from unlabeled data, for example by predicting a hidden part of the input from the rest, rather than relying on human-labeled data sets) and fine-tuning, the model can apply general information it has learned to a specific task.
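To make the idea of learning from unlabeled data more concrete, here is a minimal sketch of one common self-supervised setup: masked-word prediction. It only shows how training pairs can be derived from raw text without human annotation; it does not train a model. The function name, the `[MASK]` token, and the sample sentence are illustrative assumptions and are not taken from watsonx.

```python
MASK = "[MASK]"  # placeholder token; the exact token is an assumption


def make_masked_examples(sentence):
    """Turn one unlabeled sentence into (masked_input, target_word) pairs.

    Each pair hides a single word. The hidden word itself serves as the
    label, so no human annotation is required.
    """
    words = sentence.split()
    examples = []
    for i, target in enumerate(words):
        masked = words[:i] + [MASK] + words[i + 1:]
        examples.append((" ".join(masked), target))
    return examples


# Hypothetical unlabeled input text.
examples = make_masked_examples("data drives better decisions")
for masked_input, target in examples:
    print(f"{masked_input!r} -> {target!r}")
```

A model pretrained on many such pairs picks up general patterns in the text. Fine-tuning then adapts that general model to a specific task, typically with far less task-specific data.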

With watsonx, users have access to the toolset, technology, infrastructure, and consulting expertise to build their own or fine-tune and adapt available AI models on their own data and deploy them at scale in a trustworthy and open environment. Competitive differentiation and unique business value will increasingly be derived from how adaptable an AI model can be to an enterprise's unique data and domain knowledge.

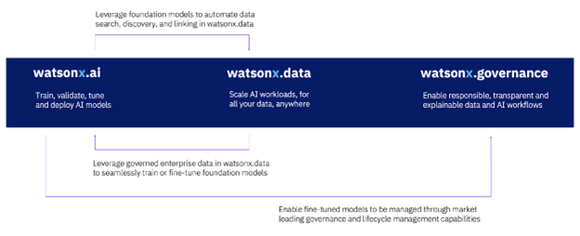

The IBM watsonx platform consists of three unique product sets to help address these needs, as shown in Figure 1:

Figure 1: Scale and accelerate the impact of AI with trusted data using IBM watsonx

IBM watsonx.data

Focusing on the data side of things, IBM watsonx.data is a lakehouse optimized for all data, analytics, and AI workloads. IBM watsonx.data is designed to help organizations:

- Access all their data and maximize workload coverage across all hybrid-cloud environments. Expect seamless deployment of a fully managed service across any cloud or on-premises environment. Access any data source, wherever it resides, through a single point of entry and combine it using open data formats. Integrate into existing environments with open source, open standards, and interoperability with IBM and third-party services.

- Accelerate time to trusted insights. Start with built-in governance and automation; strengthen enterprise compliance and security with unified governance across the entire ecosystem. A click-and-go console helps teams ingest, access, and transform data and run workloads. The product provides a dashboard that makes it easier for organizations to save money and deliver fresh, trusted insights.

- Reduce the cost of a data warehouse via workload optimization across multiple query engines and storage tiers. Optimize costly warehouse workloads with fit-for-purpose engines that scale up and down automatically. Reduce costs by eliminating duplication of data when the enterprise uses low-cost object storage; extract more value from the data in ineffective data lakes. Savings, of course, may vary depending on configurations, workloads, and vendors.

Organizations typically find themselves at one or more of these three stages:

- They remain on traditional warehouses or analytic appliances but are looking for greater flexibility and perhaps ways to tackle new workloads.

- They have adopted traditional data lakes but are struggling to get a sufficient return on their investment and to manage those systems.

- They have adopted cloud data warehouses but are concerned about ever-increasing billing costs.

All three of these groups are looking for ways to get more flexibility, adopt more workloads, reduce costs, and reduce complexity.

IBM watsonx.data is designed to address the needs of all three groups and the shortcomings of some first-generation lakehouses. It combines open, flexible, and low-cost storage of data lakes with the transactional qualities and performance of a data warehouse. This enables data (structured, semi-structured, and unstructured) to reside in commodity storage, bringing together the best of data lakes and warehouses to enable best-in-class AI, BI, and ML in one solution without vendor lock-in.

Some of the key capabilities of watsonx.data are:

- It scales for BI across all data with multiple high-performance query engines optimized for different workloads (for example, Presto, Spark, Db2, and Netezza).

- It enables data-sharing between these different engines.

- It uses shared common data storage across data lake and data warehouse functions, avoiding unnecessary time-consuming ETL/ELT jobs.

- It eradicates unnecessary data duplication and replication.

- It provides consistent governance, security, and user experience across hybrid multi-clouds.

- It leverages an open and flexible architecture built on open source without vendor lock-in.

- It can be deployed across hybrid-cloud environments (on-premises, private, public clouds) on multiple hyperscalers.

- It offers a wide range of prebuilt integration capabilities incorporating IBM data-fabric capabilities.

- It provides global governance across all data in the enterprise, leveraging the IBM data-fabric capabilities.

- It is extensible through APIs, value-add partner ecosystems, accelerators, and third-party solutions.

Modularity and flexibility are key when implementing a lakehouse. If an organization has a Hadoop data lake with data stored on Hadoop Distributed File System (HDFS), the metadata can be cataloged using Hive, and the metadata and data can be brought to the lakehouse (watsonx.data) so that, from day one, the most appropriate engines can be used to query the data. New data arriving in the lakehouse needs to be integrated with existing data using the metadata and storage layers (Hive and HDFS) and continuously analyzed without affecting existing applications using the data lake. Over time, data can be moved into the lakehouse at an organization’s own pace.
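The role of the shared metadata layer can be illustrated with a small toy model. The sketch below is not the watsonx.data or Hive API; the class names, the `plan_scan` method, and the storage path are all hypothetical. It only shows the general idea that, once a table's metadata is registered in a common catalog, more than one engine can resolve the same files in place rather than working from separate copies.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class TableEntry:
    """Metadata for one table: where its files live and how they are stored."""
    name: str
    location: str      # hypothetical storage path
    file_format: str


@dataclass
class SharedCatalog:
    """Toy stand-in for a shared metadata catalog (for example, a Hive metastore)."""
    tables: dict = field(default_factory=dict)

    def register(self, entry: TableEntry) -> None:
        self.tables[entry.name] = entry

    def resolve(self, name: str) -> TableEntry:
        return self.tables[name]


@dataclass
class Engine:
    """Toy query engine that looks tables up in the catalog instead of copying data."""
    name: str
    catalog: SharedCatalog

    def plan_scan(self, table: str) -> str:
        entry = self.catalog.resolve(table)
        return f"{self.name} scans {entry.file_format} files at {entry.location}"


catalog = SharedCatalog()
# Existing data-lake table: only its metadata is registered; the files stay put.
catalog.register(TableEntry("sales", "hdfs://example-cluster/warehouse/sales", "parquet"))

presto = Engine("presto", catalog)
spark = Engine("spark", catalog)

print(presto.plan_scan("sales"))
print(spark.plan_scan("sales"))
```

Both toy engines end up pointing at the same storage location, which is the property that lets existing data-lake applications keep running while new engines query the same data.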

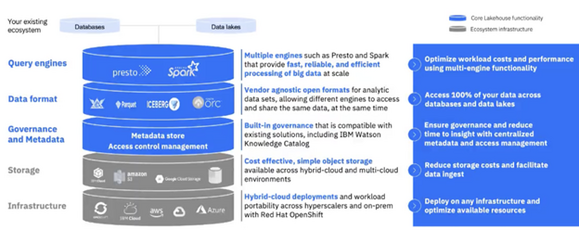

Many of the watsonx.data components shown in Figure 2 are based on open-source technologies such as Presto, Iceberg, Hive, Ranger, and others. IBM watsonx.data also offers a wide range of integration with existing IBM and third-party products.

Figure 2: Overview of watsonx.data components

AI cannot exist without information architecture (IA). An IA such as a data fabric forms a crucial foundation for a client's data infrastructure, facilitating the realization of AI's benefits. Its primary function is to gather, prepare, and organize data, making it readily available for consumption. This data preparation is crucial for maximizing an organization’s AI capabilities. Once the data is properly organized, it can be readily accessed and used by AI builders using watsonx—more specifically, watsonx.ai and watsonx.data.

Last, but by no means least, organizational culture plays an important role in adopting and implementing AI. Simply put, organizations and people that embrace and trust AI have the potential to outperform those that don’t. Organizations that don’t do so face the threat of extinction.

Next Steps

In closing, lakehouses that offer these capabilities and form part of an integrated AI and data system, like those discussed in the book The Lakehouse Effect: A New Era for Data Insights and AI, give organizations of any size the potential to leverage AI as a consumable service with which anyone can interact.

AI should be as easy as driving a vehicle without having to know the inner workings of a combustion engine or an electric motor. AI should be perceived as reliable, trustworthy, and as safe as traveling in an airliner. AI should also be as consumable as flicking a light switch on a wall, trusting that the light will enable everyone to see more clearly. Simply ask an AI system a question or give it a task and it will do the work faster, more accurately, and more intelligently than humans alone.

In summary, AI can be the key to business enlightenment. It can help us step out of the dark, unwrapping the DNA buried deep within our organization’s data and business processes to produce the most revealing and deepest insights that help us achieve smarter business outcomes.

I hope you succeed in all your data and AI adventures. The new book The Lakehouse Effect: A New Era for Data Insights and AI helps you to take that first or next step on your journey.

Having read this excerpt, if you are interested in reading the whole book, you can download it at no cost here.

MC Press Online