Combining encryption and tokenization is often the best data protection strategy for IBM i-centric organizations that want to reduce risk substantially and lower compliance costs.

A few years ago, data security was largely a matter of providing firewall protection for the network and sending sensitive data from Point A to Point B through a secure connection. Today, with the plethora of industry data security mandates, data privacy laws, high-profile data breaches, and increasingly savvy cybercriminals who keep changing their attack strategies, organizations have much more at stake. Reducing the risk of a data breach is paramount for meeting compliance regulations, maintaining customer loyalty, and protecting the brand.

Fortunately, there are multiple existing and emerging technologies that work well in a distributed IBM i-centric IT environment to protect data and reduce overall risk. These include data encryption and a newer technology, data tokenization. A year ago, CISOs typically viewed encryption and tokenization as competing technologies: it was a case of either/or. Now, best practices are showing that for many organizations a hybrid approach is the most effective solution for limiting risk. This article explores how encryption and tokenization can be employed to protect both cardholder information and personally identifiable information (PII) in an IBM i environment.

A Quick Look at Encryption

When networks were private—before the rise of the Internet—data was rarely encrypted. Encryption was primarily used only to protect specific information, such as passwords. Today, however, networks are almost always interconnected to other networks, and companies rely on the Internet to access and transmit all types of information. Many types of information must be protected at all times from increasingly sophisticated and rapidly evolving threats, whether that information is traversing a network (in motion), stored on a system (at rest), or being processed in an application (in use).

File-level encryption is necessary when an application uses a file rather than a database to store sensitive data, such as when storing data on a network, in an application, or on backup media. This level of encryption typically requires some modifications to the original application because new read-and-write commands that will invoke encryption and decryption components need to be inserted. In most cases, these modifications can be automated through scripts and filters that scan the applications and do find-and-replace operations, thus minimizing the effort.

Sensitive data also needs to be encrypted and decrypted at the database field level. In most scenarios, database field encryption can be accomplished without changing the application or the way it accesses the database; any necessary modifications are implemented in the database itself.

Tokenization: A Data Security Model on the Rise

Tokenization—the data security model that substitutes surrogate values for sensitive information in business systems—is rising rapidly as an effective method for reducing corporate risk and ensuring compliance with data security standards and data privacy laws. For companies that need to comply with the Payment Card Industry's Data Security Standard (PCI DSS), it's also lauded for its ability to reduce the cost of compliance by taking entire systems out of scope for PCI assessments.

Tokens replace sensitive data such as credit card numbers, Social Security numbers, and birthdates in applications and database fields with substitute values that have no mathematical relationship to the original data, so the original cannot be derived from the token. The sensitive data is simultaneously encrypted and stored in a central data vault, where it can be unlocked only with proper authorization credentials. A token can be passed safely around the network between applications, databases, and business processes, leaving the encrypted data it represents securely stored in the data vault.
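The tokenize/detokenize round trip described above can be sketched as follows. This is a minimal illustration that assumes a single in-process vault. The class `TokenVault`, its methods, and the user names are hypothetical. To keep the sketch standard-library only, the encryption step is left out and the vault stores the value as given. A real vault would store ciphertext encrypted under a key from the key manager.

```python
import secrets


class TokenVault:
    """Hypothetical in-memory token vault, for illustration only."""

    def __init__(self, authorized_users):
        self._authorized = set(authorized_users)
        self._by_token = {}  # token -> stored value

    def tokenize(self, value: str) -> str:
        # A random token has no mathematical relationship to the value,
        # so the value cannot be derived from the token.
        token = secrets.token_hex(8)
        while token in self._by_token:  # retry on the unlikely collision
            token = secrets.token_hex(8)
        # A real vault would store ciphertext encrypted under a key from the
        # key manager; this sketch stores the value as-is to stay stdlib-only.
        self._by_token[token] = value
        return token

    def detokenize(self, token: str, user: str) -> str:
        # Only holders of proper authorization can unlock the original value.
        if user not in self._authorized:
            raise PermissionError(f"{user} is not authorized to detokenize")
        return self._by_token[token]


vault = TokenVault(authorized_users={"settlement-batch"})
token = vault.tokenize("4111111111111111")
print(token)                                        # 16 random hex characters
print(vault.detokenize(token, "settlement-batch"))  # 4111111111111111
```

Calling `vault.detokenize(token, "call-center")` raises `PermissionError`. That check is what lets the token circulate among applications while the value it stands for stays locked in the vault.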

A format-preserving token can be used by any file, application, database, or backup medium throughout the enterprise, thus minimizing the risk of exposing the actual sensitive data while also allowing business and analytical applications to work without modification. Unlike encryption alone, tokens can be generated so that they maintain the format of the original sensitive data, limiting changes to the applications and databases that store the data.
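Format preservation can be illustrated with a small sketch. It assumes a simple per-character rule: each digit becomes a random digit, each letter becomes a random letter of the same case, and separators are kept. The function name `format_preserving_token` is hypothetical. This is not the token server's actual algorithm, which the article does not describe.

```python
import secrets
import string


def format_preserving_token(value: str) -> str:
    """Hypothetical: random characters of the same class and layout."""
    out = []
    for ch in value:
        if ch.isdigit():
            out.append(secrets.choice(string.digits))
        elif ch.isalpha():
            pool = string.ascii_uppercase if ch.isupper() else string.ascii_lowercase
            out.append(secrets.choice(pool))
        else:
            out.append(ch)  # keep separators such as '-' so field layouts still fit
    return "".join(out)


ssn_token = format_preserving_token("123-45-6789")
print(ssn_token)  # random digits in the same ###-##-#### layout
```

Because the token has the same length and shape as the original, a field defined for Social Security numbers can hold it unchanged. A production token server would also reject a token that happened to equal the original value and would record the mapping in the vault. Both steps are left out here.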

Because tokenization reduces the number of places where sensitive data is stored by locking the ciphertext away in a central data vault, sensitive data is easier to manage and more secure. It's much like storing the Crown Jewels in the Tower of London or the official U.S. gold reserves at Fort Knox: each is a single, well-guarded, easily managed repository of valuable items. This provides greater security than traditional encryption, in which sensitive values are encrypted and the ciphertext is returned to its original location in an application or database, where it remains more widely exposed.

Reducing Risk with Tokenization

Technology plays a big role in reducing risk, but the other part of risk reduction is people. Because tokenization removes sensitive data from applications and databases, it allows companies to limit the number of employees who can see the data without compromising their ability to do their jobs. Format-preserving tokens let business processes and analytics run just as they did with unencrypted or encrypted data, so employees can still perform their duties even though they no longer have access to the sensitive values. Take the example of a call center rep at a utility company who routinely accesses credit card numbers to verify customers and answer questions. Tokens can be generated that retain the last four digits of the card number, allowing the rep to validate the caller's identity without seeing the entire number. In this scenario, most call center operators would no longer have, or need, permission to see the actual numbers, which significantly reduces the risk of internal theft.
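The call center example can be sketched as follows, assuming the token keeps the last four digits and randomizes the rest. The functions `card_token_keep_last4` and `caller_matches` are hypothetical names used only for illustration.

```python
import secrets


def card_token_keep_last4(pan: str) -> str:
    """Hypothetical: random digits for all but the last four positions."""
    digits = pan.replace(" ", "")
    head = "".join(secrets.choice("0123456789") for _ in digits[:-4])
    return head + digits[-4:]


def caller_matches(token: str, stated_last4: str) -> bool:
    # The rep compares against the token only; the full card number
    # is never displayed and the vault is never queried.
    return token[-4:] == stated_last4


card_token = card_token_keep_last4("4111 1111 1111 1234")
print(caller_matches(card_token, "1234"))  # True
print(caller_matches(card_token, "9999"))  # False
```

The verification step needs nothing but the token, so the rep's application can run with no detokenization rights at all.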

With Format Preserving Tokenization, a versatile variation of the tokenization model that protects personal and patient information as well as it protects cardholder data, the relationship between data and token is preserved, even when encryption keys are rotated. Because the token server can maintain a strict one-to-one relationship between each token and data value, tokens can be used as primary and foreign keys, and referential integrity is assured whenever the protected field appears in multiple data sets. And because the data vault holds only one record for each data value (and its token), storage requirements are minimized. Since format-preserving tokens maintain referential integrity, any type of analytics, from loss prevention to customer loyalty to data warehousing, can be run without modification, while employees who can do their jobs without the sensitive data are no longer exposed to it.
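The one-to-one mapping, and the joins it makes possible, can be sketched as follows. `ConsistentTokenizer` and the sample data sets are hypothetical. For brevity, the value-to-token index is kept in memory in the clear. A real vault would hold ciphertext, and the way it locates an existing record is not described in the article.

```python
import secrets


class ConsistentTokenizer:
    """Hypothetical token server keeping a strict one-to-one
    value <-> token mapping (illustration only)."""

    def __init__(self):
        self._token_for = {}  # value -> token (one record per value)
        self._value_for = {}  # token -> value

    def _random_digits(self, n: int) -> str:
        return "".join(secrets.choice("0123456789") for _ in range(n))

    def tokenize(self, value: str) -> str:
        if value in self._token_for:  # reuse the existing record
            return self._token_for[value]
        token = self._random_digits(len(value))
        while token in self._value_for or token == value:
            token = self._random_digits(len(value))
        self._token_for[value] = token
        self._value_for[token] = value
        return token


tk = ConsistentTokenizer()
orders = [("A1", tk.tokenize("4111111111111111")),
          ("A2", tk.tokenize("5500000000000004"))]
loyalty = {tk.tokenize("4111111111111111"): "Gold"}

# The join runs on tokens alone; no card numbers are needed.
joined = [(order_id, loyalty.get(tok)) for order_id, tok in orders]
print(joined)  # [('A1', 'Gold'), ('A2', None)]
```

Because the same card number always yields the same token, the token works as a join key across the order and loyalty data sets, and the vault keeps a single record per value.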

Risk Reduction Scenarios with Tokenization

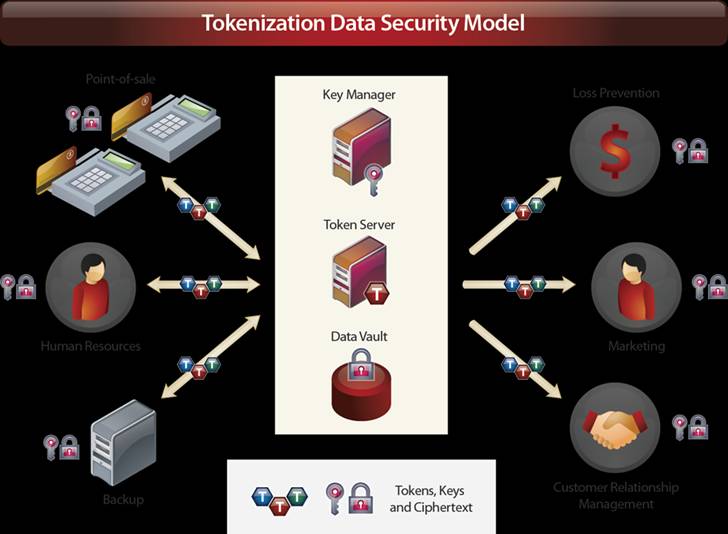

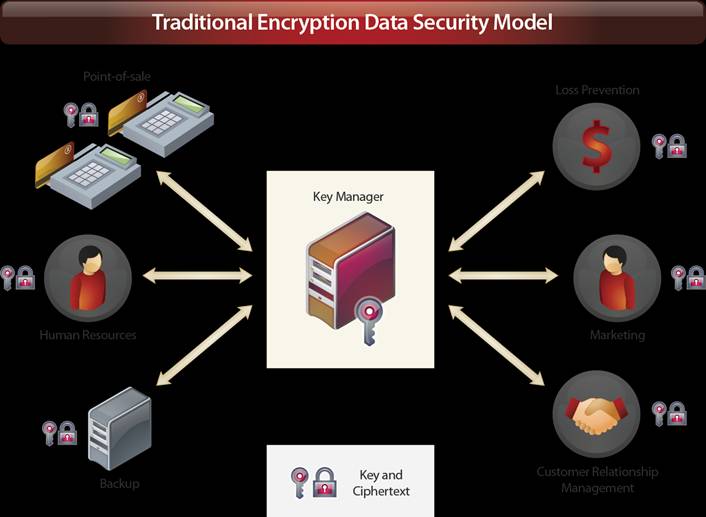

There are two distinct scenarios in which implementing a token strategy can be beneficial for reducing risk: in reducing the number of places sensitive encrypted data resides, and in reducing the scope of a PCI DSS audit. The hub-and-spoke model is the same for both and contains three components: a centralized encryption key manager to manage the lifecycle of keys, a token server to encrypt data and generate tokens, and a central data vault to hold the encrypted values, or ciphertext. Together, these three components make up the hub. The spokes are the endpoints where sensitive data originates. Spokes can be, for example, the point-of-sale terminals in retail stores or the servers in a department, call center, or Web site.

Figure 1: Here's an example of the encryption model.

In the traditional encryption data protection model, data is either encrypted and stored at the spokes or encrypted at headquarters and distributed to the spokes. Under the tokenization model, encrypted data is stored in a central data vault, and tokens replace the corresponding ciphertext in applications available to the spokes, thereby reducing the instances where ciphertext resides throughout the enterprise. This significantly reduces risk, as encrypted data resides only in the central data vault until it is needed by authorized applications.

Figure 2: Here's an example of the tokenization model.

The tokenization model also reduces scope for a PCI DSS audit. In the traditional encryption model, ciphertext resides on machines throughout the organization, both at the central hub and all of the spokes. All of these machines are "in scope" for a PCI DSS audit. In the tokenization model, many of the spokes can use the format-preserving tokens in place of ciphertext, which takes those systems out of scope for the audit.
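The scope argument can be made concrete with a toy tally. This sketch assumes the article's simplified rule: any system holding ciphertext is in scope, and a system holding only format-preserving tokens is not. The system names are hypothetical. Actual scoping is decided during the PCI DSS assessment, not by a script.

```python
# Each system is labeled with the form of card data it holds.
# System names are hypothetical examples.
ENCRYPTION_MODEL = {
    "hub": "ciphertext",
    "pos-store-12": "ciphertext",
    "call-center": "ciphertext",
    "loss-prevention": "ciphertext",
}
TOKENIZATION_MODEL = {
    "hub": "ciphertext",  # the central data vault
    "pos-store-12": "token",
    "call-center": "token",
    "loss-prevention": "token",
}


def in_scope(systems):
    """Simplified rule: systems holding ciphertext stay in scope;
    systems holding only format-preserving tokens drop out."""
    return sorted(name for name, held in systems.items() if held != "token")


print(in_scope(ENCRYPTION_MODEL))    # ['call-center', 'hub', 'loss-prevention', 'pos-store-12']
print(in_scope(TOKENIZATION_MODEL))  # ['hub']
```

Under this rule, moving the spokes from ciphertext to tokens shrinks the in-scope set from every system to the hub alone.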

IBM i—Hub or Spoke? Either Way Works

The IBM i can serve as the central hub for tokenization and the data vault, or it can be a spoke. The IBM i's centralized application architecture is an ideal platform for hosting the token server and key manager and for encrypting and storing information in the data vault. In this scenario, the IBM i is the hub, and the various applications that use tokens—such as point-of-sale systems and loss-prevention systems—are the spokes.

The other option is to put the token server and key manager, along with the data vault (in a DB2 database), on a separate Windows or UNIX distributed platform. In this instance, the IBM i then becomes a spoke.

A third option is to run the token server in a distributed environment but use DB2 on the IBM i to house the data vault, which adds layers of protection by separating the components.

Encryption with Tokenization: The Hybrid Approach

To maximize data security while reducing risk, encryption and tokenization can be combined to protect sensitive data in transit or at rest. While many organizations look to either encryption or tokenization, the hybrid approach works best in several scenarios.

For instance, companies that take credit card numbers through a Web site or by mail have to protect sensitive information as structured data in the case of data entry at the Web site, as well as unstructured data in the form of the scanned document received in the mail. In this case, it makes sense to tokenize the credit card number but use encryption to secure the scanned image, sharing centralized key management for both.

In another scenario, a company might choose to encrypt the credit card number at the point of sale and transfer this encrypted payload to the tokenization system for decryption and tokenization. The current alternative is to send the unencrypted card number over a secure channel (for example, HTTPS) instead of sending an encrypted payload. When encryption at the point of sale is implemented properly, the portions of the network over which the card numbers travel may be removed from PCI DSS audit scope. Combining encryption and tokenization therefore helps maximize the reduction in both PCI DSS scope and risk.

Centralizing Key Management

The more data that needs to be encrypted, the more difficult it becomes to manage proliferating keys. Key management must be performed in a secure, tamper-proof, available, and auditable manner. Key management must also accommodate a wide range of lifecycle timelines, from seconds to years, and it must support specific key-handling requirements, such as those mandated by PCI DSS and various government regulations.

A centralized key management solution generates, distributes, rotates, revokes, and deletes keys to enable encryption/decryption and to allow only authorized users to access sensitive data. The solution should also manage keys across disparate platforms and systems so that the encryption keys can be managed centrally across all of the different databases, operating systems, and devices throughout the organization.

A key management solution should not require ciphertext to be decrypted and re-encrypted when new keys are created or existing keys are changed. A key manager automatically generates, rotates, and distributes keys to the encryption endpoints. The key manager ensures that the most recent active key is used to encrypt data and that the correct key is used to decrypt ciphertext.
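The key-selection behavior described above can be sketched as follows. The sketch assumes each ciphertext is stored together with the ID of the key that produced it. `KeyManager`, its methods, and the `k1`/`k2` IDs are hypothetical. The cipher call itself is omitted; in practice it would be a vetted algorithm such as AES from an established library.

```python
import secrets


class KeyManager:
    """Hypothetical versioned key store: the newest active key encrypts,
    and each ciphertext carries the ID of the key that produced it."""

    def __init__(self):
        self._keys = {}      # key_id -> key bytes
        self._active = None  # key_id used for new encryptions
        self.rotate()

    def rotate(self) -> str:
        # Older keys are kept for decryption, so existing ciphertext
        # does not have to be decrypted and re-encrypted.
        key_id = f"k{len(self._keys) + 1}"
        self._keys[key_id] = secrets.token_bytes(32)  # 256-bit key
        self._active = key_id
        return key_id

    def key_for_encrypt(self):
        return self._active, self._keys[self._active]

    def key_for_decrypt(self, key_id: str) -> bytes:
        return self._keys[key_id]


km = KeyManager()
old_id, _ = km.key_for_encrypt()  # record A would be encrypted under k1
km.rotate()
new_id, _ = km.key_for_encrypt()  # new records use k2
print(old_id, new_id)             # k1 k2
print(km.key_for_decrypt(old_id) != km.key_for_decrypt(new_id))  # True
```

Because decryption looks up the key by the ID stored with the ciphertext, rotation only changes which key encrypts new data. Records written under `k1` remain readable without being decrypted and re-encrypted.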

Decrypting and re-encrypting data for the purpose of rotating keys takes applications and databases offline and potentially exposes sensitive data during the decryption/re-encryption process. Data should only be decrypted and re-encrypted with a different key if a key has been compromised.

Limiting Your Risk

Protecting sensitive and business-critical data is essential to a company's reputation, profitability, and business objectives. In today's global market, where business and personal information know no boundaries, traditional point solutions that protect certain devices or applications against specific risks are insufficient to provide cross-enterprise data security. Combining encryption and tokenization with centralized key management as part of a corporate data protection program works well in an IBM i-centric environment. It protects both credit card data and personally identifiable information, limiting corporate risk and reducing the cost of complying with data security mandates and data privacy laws. A final thought: tokenizing or encrypting information at the earliest possible point in the process offers the greatest protection.


MC Press Online