This article takes a look at a high-powered testing technique that can significantly reduce the amount of system test rework for complex systems. If your “system test” task is a large question mark at the end of your Y2K project, you may want to consider implementing regression testing.

You’ve found all the source code for your programs. You’ve winnowed out the systems you no longer need. You’ve determined the time frame for your Y2K conversion and, after painstaking research, selected the right tools to use. You’ve coordinated time schedules with your business partners and service providers. Everything is in place, and conversion starts tomorrow, but something keeps nagging at the back of your brain....

What is it? Think, think, think.... Oh no, that’s it! You forgot the test plan!

Wake up! Wake up! It was just a bad dream! We’ve all heard enough to know that testing has to be an integral part of our Y2K plans. But nobody seems to be telling us exactly how much we need to test. This article introduces a whole range of test types and takes a close look at the far end of the spectrum: regression testing. Like a Humvee, regression testing may be too much for your needs. But, like a Humvee, if you need it, nothing else will do.

Test Types

Whenever any substantive system modification is planned, the same terms tend to pop up: unit testing and system testing. These terms often cause confusion and sometimes outright dissension. Before I discuss the various specific test types, I’ll quickly review these broad categories.

Here’s one definition: Unit testing refers to testing a single program or, at most, a single job stream, while system testing functions at an application level. Notice the vagueness? Sometimes the terms are distinguished by ownership: Unit testing is performed by programmers, and system testing is done by application experts. OK. Well, that still doesn’t say what either one is or is not.

The truth is that unit testing and system testing are really different approaches to achieving the same goal: ensuring that a given input will produce the predicted output. Unit testing does it by making sure that individual system components perform their duties correctly. System testing ensures that no unforeseen interactions occur and that the individual tasks can actually coexist without unexpected side effects. The more complex a system and the more widespread the changes (and by definition, Y2K modifications are the most far-reaching changes you can expect to apply), the more likely it is that system test problems will occur. However, you can offset this potential problem with a stronger unit testing approach. Rigorous unit testing decreases the probability that system testing will uncover anything unexpected (assuming—perhaps unwisely—that your underlying application design is sound). With that in mind, I’ll review the available unit test techniques.

Types of Unit Tests

Modified applications are unit tested in four common ways: keyboard testing, output testing, file testing, and regression testing.

Keyboard testing, which primarily tests environmental integrity, makes sure objects exist and produce no hard halts. Keyboard tests for maintenance programs and inquiries require bringing up the first screen; for reports and batch jobs, the test involves executing the jobs to see if they run to completion. Keyboard testing requires only a single pass for current date operation; since the mission is not to look for Y2K anomalies but rather to check that objects exist and run, a second pass is overkill. Keyboard testing is in no way adequate for postconversion testing; it is normally done as part of the preconversion process to make sure that the environment is intact.

Output testing looks at reports and screens. For maintenance programs, the output test covers such tasks as adding, changing, and deleting records. For inquiries and reports, the jobs are run and their output is checked for inclusion of appropriate records and correct sequencing. System flows are run to completion to make sure that basic application functions work, and period closes are performed and reviewed. The tests are then repeated in a second pass with post-2000 dates, again checking date ranging and sequencing. The post-2000 pass focuses especially on verifying that historical data is still included correctly (centering on functions like scheduling, account aging, availability, and expiration).
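To make the sequencing check concrete, here is a minimal Python sketch showing the kind of failure the post-2000 pass is designed to catch. A program that sequences on a raw two-digit year sorts January 2000 ahead of 1998. The fixed window with a pivot of 40 is a hypothetical expansion rule chosen for illustration, not any particular tool's rule.

```python
from datetime import date

PIVOT = 40  # hypothetical fixed window: 00-39 -> 20xx, 40-99 -> 19xx

def expand_year(yy: int) -> int:
    """Expand a two-digit year using the hypothetical window above."""
    return 2000 + yy if yy < PIVOT else 1900 + yy

def in_date_order(dates) -> bool:
    """True if a report's dates never go backward (non-decreasing)."""
    return all(a <= b for a, b in zip(dates, dates[1:]))

# Rows keyed the way an unconverted program stores them: (yy, mm, dd).
rows = [(98, 11, 30), (99, 12, 31), (0, 1, 15)]

# Sequenced on the raw two-digit key, the January 2000 row sorts first...
raw_order = [date(expand_year(y), m, d) for y, m, d in sorted(rows)]
# ...while a century-enabled program sequences on the expanded date.
fixed_order = sorted(date(expand_year(y), m, d) for y, m, d in rows)

print(in_date_order(raw_order))    # False: the 2000 row precedes 1998
print(in_date_order(fixed_order))  # True
```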

File testing, which involves viewing the contents of files, costs substantially more than the tests already mentioned, because whoever performs it must understand more about what each program actually does. The basic functions of output testing are performed, and, in addition, each file written to or updated by a given program is checked to ensure that expanded fields are updated correctly. Because file data is the primary means of communication between application programs, this extra check does a far better job than output testing alone of ensuring that system testing will not uncover additional problems.

Finally, regression testing—the Humvee of testing—operates on the principle that executing the same actions in equivalent environments must yield equivalent results. Y2K modifications provide a unique opportunity to apply this principle. Normally, modifications change the expected output of a system; we usually change a system only to alter or add function. However, for Y2K modifications, the system should, for the most part, act exactly like it did before. For instance, after a side-by-side flow test of a base environment and a century-enabled environment, the two databases should be exactly the same (except that the century-enabled database has century information added). The goal of regression testing is to prove that the environment still performs as it did prior to modification. Passing this level of testing ensures that no functionality is lost, but this assurance comes at quite a premium in terms of time.

One note about this time premium, however: a properly regression-tested system has a much higher chance of passing further system testing requirements. And while regression testing can be quantified up front to a certain degree, system testing (or, more importantly, system test rework) is almost impossible to budget for. System testing is the great black hole of dollars and hours at the end of the project, when resources are scarce, budgets are tight, and deadlines are looming. This exposure tends to go up exponentially with the complexity of the system. For larger systems, shifting the burden of testing toward the front can have great benefits as the project goes on.

Generating Data for Regression Tests

Regression testing requires rigorous testing procedures. The database must be in a known state and unavailable for access during the setup procedures. This is done by taking a snapshot (that is, making a secured copy) of the production data during downtime. This snapshot is now your preconversion baseline library (or libraries). The baseline is then century-enabled using whichever method you have chosen, creating a postconversion baseline. These baseline libraries (pre- and postconversion) are saved to allow refreshes and multiple system flows, depending on the complexity of tests required. For example, you might need to run an entire set of tests with a system parameter set one way, then change that parameter and run another set of tests.

Figure 1 diagrams a basic regression test. The snapshot (B0) that you take of the base production library is converted, creating C0. Both B0 and C0 are saved. At the same time, you can duplicate C0 for use in ad hoc (nonregression) testing (in the figure, that’s environment T0). Next, run a flow test on B0, labeling the result B1. You run the same flow test on C0, preferably via a keystroke record-and-playback tool, to generate C1. B1 and C1 are then compared record for record. If they match, taking into account the century information in C1, the regression test has been completed successfully. Any ad hoc user testing can be done in the T0/T1 environment.
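The comparison at the end of that flow can be modeled in a few lines. This Python sketch is a toy model under stated assumptions. Libraries are dictionaries of files. A single hypothetical ORDERS file with a two-digit ORDYR year field stands in for your database, and a fixed window with pivot 40 stands in for your conversion rules. The point it illustrates is that C1 should equal B1 once B1 is run through the same conversion that produced C0.

```python
import copy

PIVOT = 40  # hypothetical fixed window; substitute your conversion's rules
YEAR_FIELDS = {"ORDERS": {"ORDYR"}}  # hypothetical: which fields hold years

def expand_year(yy: int) -> int:
    return 2000 + yy if yy < PIVOT else 1900 + yy

def century_enable(lib: dict) -> dict:
    """Apply the conversion to a copy of a library (B0 -> C0, or B1 for comparison)."""
    out = copy.deepcopy(lib)
    for fname, recs in out.items():
        for rec in recs:
            for fld in YEAR_FIELDS.get(fname, ()):
                rec[fld] = expand_year(rec[fld])
    return out

def flow_test(lib: dict, century_enabled: bool) -> dict:
    """Stand-in for the recorded script: enter one order dated 1998."""
    out = copy.deepcopy(lib)
    year = 1998 if century_enabled else 98
    out["ORDERS"].append({"ORDNO": len(out["ORDERS"]) + 1, "ORDYR": year})
    return out

def regression_passes(b1: dict, c1: dict) -> bool:
    """C1 must equal B1 once B1 is expanded by the same rules that produced C0."""
    return century_enable(b1) == c1

b0 = {"ORDERS": [{"ORDNO": 1, "ORDYR": 97}]}  # production snapshot
c0 = century_enable(b0)                       # converted baseline (saved)
b1 = flow_test(b0, century_enabled=False)     # flow test on the base system
c1 = flow_test(c0, century_enabled=True)      # same script on the converted system
print(regression_passes(b1, c1))  # True
```

Because `century_enable` works on a copy, B0 and C0 stay intact for later refreshes, mirroring the saved baseline libraries.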

Depending on timing, rather than performing the comparison immediately, you can save B1 and C1 for later comparison (on another machine, even) and restore B0 and C0 to allow for subsequent flow tests.

I strongly recommend that you run batch jobs, especially update jobs, in a single-threaded job queue and that you do not perform interactive testing while the batch jobs run. This precaution helps you avoid sequencing problems by ensuring that sequential counters such as order numbers and history sequence numbers will be identical in both runs. Failure to follow these guidelines can render the test results inaccurate.
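The following Python sketch illustrates why interleaving matters. It models a single shared counter, such as a next-order-number, handing out numbers in whatever order the work is actually processed. The job and transaction labels and the starting number are hypothetical.

```python
from itertools import count
from typing import Dict, List, Tuple

Entry = Tuple[str, str]  # (job, transaction), hypothetical labels

def run_jobs(schedule: List[Entry], first_no: int = 1000) -> Dict[Entry, int]:
    """Assign order numbers from one shared counter in the order work was processed."""
    next_no = count(first_no)
    return {entry: next(next_no) for entry in schedule}

batch = [("ORDLOAD", "A"), ("ORDLOAD", "B")]  # batch update job
screen = [("ORDENTRY", "X")]                  # interactive order entry

base_run = run_jobs(batch + screen)                   # single-threaded, no overlap
conv_run = run_jobs(batch + screen)                   # same discipline, converted system
racy_run = run_jobs([batch[0], screen[0], batch[1]])  # interactive work interleaved

print(base_run == conv_run)  # True
print(base_run == racy_run)  # False: transaction B received a different number
```

Every record that carries the number from transaction B would then differ between B1 and C1, even though nothing is wrong with the conversion.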

Once regression testing is successfully completed, you can perform century testing much as you would in any other environment (testing date ranges, aging, sort and selection, and so on).

Comparing Data

With regression testing, you’re trying to prove that the modifications you made for the Year 2000 have no net effect on pre-2000 processing. The beauty of computer software is that if you perform the same operations on the same data, you should get the same results. The following paragraphs offer some guidelines for testing database files, spool files, and other objects.

Database Files

Testing database files—a reasonably straightforward process—can involve some twists that can cause you grief. The objective is to compare all the physical files in your database, file by file and member by member, for equality. However, equality can be a somewhat relative term. In regression testing, two classes of fields require special handling: expanded fields (fields containing years that have been expanded by your Year 2000 process) and time stamps.
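Deciding which files can use a plain compare and which need a custom program is a mechanical step once you know each file's fields. Here is a minimal Python sketch. It assumes you can mark each field as expanded or as a time stamp, for example by combining DSPFFD output with your conversion tool's list of expanded fields. The `Field` class and the file layouts are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List

@dataclass
class Field:
    name: str
    expanded: bool = False   # holds a year widened by the Y2K conversion
    timestamp: bool = False  # holds a time stamp that differs from run to run

def compare_method(fields: List[Field]) -> str:
    """Plain files can use CMPPFM; any exception-class field needs a custom program."""
    if any(f.expanded or f.timestamp for f in fields):
        return "custom program"
    return "CMPPFM"

def plan(files: Dict[str, List[Field]]) -> Dict[str, str]:
    return {name: compare_method(flds) for name, flds in files.items()}

# Hypothetical field layouts; real ones would come from your file descriptions.
files = {
    "ABK": [Field("BANK"), Field("BNAME")],
    "ORDHDR": [Field("ORDNO"), Field("ORDYR", expanded=True)],
    "AUDLOG": [Field("USER"), Field("CHGTIME", timestamp=True)],
}
print(plan(files))
# {'ABK': 'CMPPFM', 'ORDHDR': 'custom program', 'AUDLOG': 'custom program'}
```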

If a file has neither of these field classes (for example, in V4.0.5 of System Software Associates’ BPCS for the AS/400, some 60 percent of the data files fall into this category), a simple Compare Physical File Member (CMPPFM) command can be used. Look at this real-world example: In a default installation of SSA’s BPCS enterprise resource planning (ERP) package, all files normally reside in a library called BPCSF. Assume that all the files in that library have been converted into another library called BPCSFC. A master file with no date fields is the Bank Master File, ABK. Since ABK has no date fields, compare the pre- and postconversion files using the CMPPFM command as follows:

CMPPFM NEWFILE(BPCSFC/ABK) NEWMBR(*ALL) OLDFILE(BPCSF/*NEWFILE) RPTTYPE(*SUMMARY) OUTPUT(*PRINT)

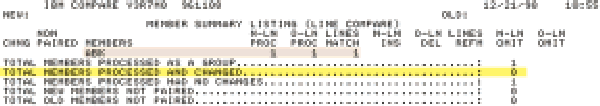

This command generates a report similar to the one in Figure 2. The figure omits the beginning and ending information and presents only the relevant information, which starts on page 2 of the printed report.

Look at the first shaded line in the figure. You will see a similar line for each member. If the first three numbers in the line (beneath the column headings N-LN PROC, O-LN PROC, and LINES MATCH) are the same, the old member and the new member match.

The second shaded line shows how many members were changed; if this number is zero, all compared members were the same. Note also that if the number in either of the last two lines for the file (Total New Members Not Paired and Total Old Members Not Paired) is not zero, the two files do not have the same members.
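If you record these numbers for many files, the reading rules above are easy to apply mechanically. This Python sketch assumes the counts have already been transcribed from the *SUMMARY report, by hand or by a parser you would write for your release's layout. It does not parse the spool file itself, and the sample counts are hypothetical.

```python
from typing import Dict, List, Tuple

# Per-member counts from the report: (N-LN PROC, O-LN PROC, LINES MATCH).
MemberCounts = Tuple[int, int, int]

def mismatched_members(counts: Dict[str, MemberCounts]) -> List[str]:
    """Members whose three counts are not all equal."""
    return sorted(m for m, (new, old, match) in counts.items()
                  if not new == old == match)

def file_passes(counts: Dict[str, MemberCounts], members_changed: int,
                new_not_paired: int, old_not_paired: int) -> bool:
    """Apply the reading rules for a CMPPFM summary report to one file."""
    return (not mismatched_members(counts)
            and members_changed == 0
            and new_not_paired == 0
            and old_not_paired == 0)

good = {"ABK": (42, 42, 42)}
bad = {"ABK": (42, 42, 41)}
print(file_passes(good, 0, 0, 0))  # True
print(mismatched_members(bad))     # ['ABK']
```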

While Figure 2 illustrates a fairly uncomplicated case, comparing other database files might require a program to handle two steps: comparing the member list of each file, then comparing the actual members of the files. The member lists must match exactly. Some applications, such as BPCS, use workstation names when naming database file members. In this situation, be certain you use the same workstation when performing testing so that the database file member names match.
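The first of those two steps, checking that the member lists match exactly, amounts to a set comparison that reports unpaired members on each side. Here is a minimal Python sketch. The workstation-based member names are hypothetical.

```python
from typing import Dict, Iterable, List

def compare_member_lists(old_members: Iterable[str],
                         new_members: Iterable[str]) -> Dict[str, List[str]]:
    """Split member names into paired and unpaired on each side."""
    old_set, new_set = set(old_members), set(new_members)
    return {
        "paired": sorted(old_set & new_set),
        "old_not_paired": sorted(old_set - new_set),
        "new_not_paired": sorted(new_set - old_set),
    }

def lists_match(old_members: Iterable[str], new_members: Iterable[str]) -> bool:
    r = compare_member_lists(old_members, new_members)
    return not r["old_not_paired"] and not r["new_not_paired"]

# Hypothetical work-file members named after the workstation that created them.
base = ["DSP01", "DSP02"]
conv_same_ws = ["DSP01", "DSP02"]
conv_other_ws = ["DSP01", "DSP07"]
print(lists_match(base, conv_same_ws))  # True
print(compare_member_lists(base, conv_other_ws))
# {'paired': ['DSP01'], 'old_not_paired': ['DSP02'], 'new_not_paired': ['DSP07']}
```

The second case shows the failure described above: testing from a different workstation produces a member that has no partner in the base library.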

The member comparison program must take into account expanded fields and time stamps, the two exception classes mentioned earlier. A program must be written for each file that has changed. The program reads the old file and performs a field-by-field comparison with the new file. Fields of the time stamp class are ignored. For fields that have been expanded, the program should use your Year 2000 solution’s expansion rules to expand the old file field and then compare it to the new file field. With a modest up-front development effort, you should have no problem generating these comparison programs automatically.
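The logic each generated program needs is small. This Python sketch shows it under stated assumptions. Records arrive in the same sequence in both members. Expanded years are widened in place under the same field name. A fixed window with pivot 40 stands in for your solution's expansion rules. The field names are hypothetical.

```python
from typing import Callable, Dict, List, Set

Record = Dict[str, int]

def expand_year(yy: int, pivot: int = 40) -> int:
    """Hypothetical fixed-window rule; substitute your Y2K tool's expansion rules."""
    return 2000 + yy if yy < pivot else 1900 + yy

def field_diffs(old: Record, new: Record, expanded: Set[str], timestamps: Set[str],
                expand: Callable[[int], int] = expand_year) -> List[str]:
    """Names of fields that differ once the expansion rule is applied to the old value."""
    diffs = []
    for name, old_val in old.items():
        if name in timestamps:
            continue  # time stamps legitimately differ between the two runs
        expected = expand(old_val) if name in expanded else old_val
        if new.get(name) != expected:
            diffs.append(name)
    return diffs

def compare_member(old_recs: List[Record], new_recs: List[Record],
                   expanded: Set[str], timestamps: Set[str]) -> List[str]:
    """Compare two members record by record (same arrival sequence assumed)."""
    problems = []
    if len(old_recs) != len(new_recs):
        problems.append(f"record count {len(old_recs)} vs {len(new_recs)}")
    for rrn, (o, n) in enumerate(zip(old_recs, new_recs), start=1):
        problems += [f"record {rrn}: {fld}"
                     for fld in field_diffs(o, n, expanded, timestamps)]
    return problems

old = [{"ORDNO": 1000, "ORDYR": 98, "CHGTIM": 101502}]
new = [{"ORDNO": 1000, "ORDYR": 1998, "CHGTIM": 101745}]
print(compare_member(old, new, {"ORDYR"}, {"CHGTIM"}))  # []
```

A conversion that adds a separate century field rather than widening the year in place would need `field_diffs` to combine the two new fields before comparing.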

Spool Files

The next area of comparison is spool files. For purposes of this discussion, let’s assume you have chosen not to expand the dates on your printed reports—if you have changed your printed reports, your comparisons will be considerably more complex.

The most practical solution is to create two output queues, OLD and NEW. As the names imply, the OLD output queue receives all printed output from the test against the old (unconverted) system. The NEW output queue receives the output from the test against the new system.

Comparing the queues involves a two-step process roughly analogous to the database comparison. First, you want to display the output queues to determine that each run generated the same spool files. Timing considerations can make this determination tricky; if a batch job takes longer in one run than in another, spooled files can appear in different orders.

Again, I strongly recommend that you run jobs in a single-threaded job queue and do not perform interactive testing concurrent with the batch jobs. This technique will ensure proper spool file sequencing. If your shop’s circumstances make it impossible to use this technique, you will have to account for potential differences in spool file order—a very important step when you perform the actual spool file comparisons.
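When the order can't be guaranteed, one way to account for it is to pair spool files by name and occurrence rather than by queue position. This Python sketch takes the spool file names as listed in each queue. The names are hypothetical. The k-th OLD file with a given name is paired with the k-th NEW file of that name.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Sequence, Tuple

def pair_spool_files(old_q: Sequence[str], new_q: Sequence[str]) -> List[Tuple[int, int]]:
    """Pair OLD and NEW spool files by name and occurrence, ignoring queue order.

    Returns (old_position, new_position) pairs. Raises ValueError when the
    queues do not hold the same number of files with each name.
    """
    old_counts, new_counts = Counter(old_q), Counter(new_q)
    if old_counts != new_counts:
        missing, extra = old_counts - new_counts, new_counts - old_counts
        raise ValueError(f"spool files differ: missing {dict(missing)}, extra {dict(extra)}")
    positions: Dict[str, List[int]] = defaultdict(list)
    for idx, name in enumerate(new_q):
        positions[name].append(idx)
    # The k-th OLD file with a given name pairs with the k-th NEW file of that name.
    return [(idx, positions[name].pop(0)) for idx, name in enumerate(old_q)]

old_q = ["ORDR01", "INVR02", "ORDR01"]
new_q = ["INVR02", "ORDR01", "ORDR01"]
print(pair_spool_files(old_q, new_q))  # [(0, 1), (1, 0), (2, 2)]
```

This still assumes that same-named reports are produced in the same relative order. If two runs of the same report can finish in either order, the pairing itself can be wrong, which is another reason to prefer the single-threaded queue.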

Once you have created your spool files and verified that the number and names of files generated by the unconverted system match those generated by the converted system, you can then compare individual spool files.

Spool file comparison essentially involves using the Copy Spool File (CPYSPLF) command to copy each spool file into a database file and then comparing the results. For those reports that don’t show the time, a simple CMPPFM will suffice. However, programs that do display the time will obviously show differences. You can handle these differences proactively or reactively. Both approaches require a program to be written that will compare, line by line, the output from the CPYSPLF command.

In a proactive approach, the line number and position of the time field for each report is defined in a database file, and this information is used during the comparison to avoid mismatches. A reactive approach is more generic and a little more difficult to implement. Whenever a difference exists between two spool files, the program checks to see if the difference occurs within a time field by checking for nn:nn:nn syntax (you may need to define other syntaxes as well). Please note that this works only for time fields with the appropriate syntax. If you expand the generic routine to allow other differences (such as time fields with no delimiters), you could conceivably allow other errors to slip through.
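Both approaches can be sketched in Python over the lines that CPYSPLF would produce. This is a simplified model. In the proactive version, time fields are keyed by absolute line number and (start, length) position, whereas a real definition file would more likely key on line within page. The reactive version recognizes only the nn:nn:nn syntax. The report lines are hypothetical.

```python
import re
from typing import Dict, List, Sequence, Tuple

TIME = re.compile(r"\d\d:\d\d:\d\d")  # the nn:nn:nn syntax; add others only with care

def _extra_lines(old: Sequence[str], new: Sequence[str]) -> List[int]:
    """Line numbers present in only one of the two copies."""
    shorter, longer = sorted((len(old), len(new)))
    return list(range(shorter + 1, longer + 1))

def _mask(line: str, start: int, length: int) -> str:
    return line[:start] + "#" * length + line[start + length:]

def proactive_diffs(old: Sequence[str], new: Sequence[str],
                    time_fields: Dict[int, List[Tuple[int, int]]]) -> List[int]:
    """Mask the defined (start, length) time positions on each line, then compare."""
    diffs = []
    for no, (o, n) in enumerate(zip(old, new), start=1):
        for start, length in time_fields.get(no, []):
            o, n = _mask(o, start, length), _mask(n, start, length)
        if o != n:
            diffs.append(no)
    return diffs + _extra_lines(old, new)

def reactive_diffs(old: Sequence[str], new: Sequence[str]) -> List[int]:
    """Accept a difference only if it disappears once nn:nn:nn values are masked."""
    diffs = []
    for no, (o, n) in enumerate(zip(old, new), start=1):
        if o != n and TIME.sub("##:##:##", o) != TIME.sub("##:##:##", n):
            diffs.append(no)
    return diffs + _extra_lines(old, new)

old = ["ORDER REGISTER   10:15:02", "ORDER 1000  98/11/30"]
new = ["ORDER REGISTER   10:17:45", "ORDER 1000  98/11/30"]
print(proactive_diffs(old, new, {1: [(17, 8)]}))  # []
print(reactive_diffs(old, new))                   # []
```

Widening `TIME` to accept undelimited digits would also mask genuine numeric differences, which is exactly the risk of expanding the generic routine.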

Less reliable approaches include writing the comparison differences to a file and then printing out only records with no “:” characters or even visually inspecting the compare output to determine if the mismatches are acceptable.

Other Objects

Other objects in your system may need to be tested. If you keep system counters or lock information in data areas, you should check those data areas to make sure they are being created correctly. Unless you have a great many of these objects, you should be able to do this manually.

Exception Conditions

With all this extraordinary rigor and precision, you would think you have a completely foolproof testing mechanism. Unfortunately, that’s not quite the case. Your testing process must address certain exception conditions that arise as a by-product of the Year 2000 conversion itself.

The section of this article dealing with data generation mentioned the use of a tool to record and play back keystrokes. This tool ensures that the input into the century-enabled system consists of exactly the same keystrokes used in the base system. That could cause a problem, depending on the design of the base application.

Let’s take BPCS again as an example. Date ranges are entered in quite a few places in BPCS. System Software Associates, the developers of BPCS, had a standard practice of defaulting date ranges to a lower date of 01/01/01 and an upper date of 12/31/99. This practice was a programming shortcut: both 01/01/01 and 12/31/99 are valid dates, so the programmer did not have to code special logic to check for 00/00/00 and 99/99/99. Unfortunately, that default range no longer works after conversion. Once the year 01 is interpreted as 2001, the lower date falls after the upper date (in 1999), and the range is rejected by the simple edit that checks for a lower date greater than the upper date.
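The following Python sketch shows the same default range passing the old edit and failing the new one. The pre-conversion edit is modeled as a comparison of six-digit yymmdd numbers. The post-conversion edit compares expanded dates using a fixed window with pivot 40. Both are hypothetical stand-ins for the real BPCS logic.

```python
from datetime import date

PIVOT = 40  # hypothetical fixed window: 00-39 -> 20xx, 40-99 -> 19xx

def legacy_range_ok(low: str, high: str) -> bool:
    """Pre-conversion edit: compare mm/dd/yy dates as six-digit yymmdd numbers."""
    def key(d: str) -> int:
        mm, dd, yy = d.split("/")
        return int(yy + mm + dd)
    return key(low) <= key(high)

def converted_range_ok(low: str, high: str) -> bool:
    """Post-conversion edit: compare the century-expanded dates."""
    def expand(d: str) -> date:
        mm, dd, yy = (int(p) for p in d.split("/"))
        return date(2000 + yy if yy < PIVOT else 1900 + yy, mm, dd)
    return expand(low) <= expand(high)

print(legacy_range_ok("01/01/01", "12/31/99"))     # True:  010101 <= 991231
print(converted_range_ok("01/01/01", "12/31/99"))  # False: 2001-01-01 > 1999-12-31
```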

A standard Year 2000 conversion of BPCS involves changing such programs to default to 00/00/00 and 99/99/99 and to skip editing if those values are entered. When used in comparisons, 00/00/00 expands to 00/00/0000 and 99/99/99 expands to 99/99/9999, thus performing the same function as the 01/01/01 and 12/31/99 did originally. Unfortunately, no single date range will select all records in both systems; what works in one environment is invalid in the other. You can often avoid the problem by simply leaving the defaults when selecting date ranges, but whoever is designing the test scripts should be cognizant of the situation.
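The converted sentinel logic can be sketched as follows. This is a minimal Python model, not SSA's code. It builds yyyymmdd comparison keys, maps 00/00/00 and 99/99/99 to all-zero and all-nine keys, exempts those sentinels from the calendar-date edit, and uses a hypothetical fixed window with pivot 40 for every other date.

```python
LOW, HIGH = "00/00/00", "99/99/99"  # converted default bounds
PIVOT = 40  # hypothetical fixed window: 00-39 -> 20xx, 40-99 -> 19xx

def bound_key(mmddyy: str) -> str:
    """Build a yyyymmdd comparison key; sentinels expand to all zeros or all nines."""
    mm, dd, yy = mmddyy.split("/")
    if mmddyy == LOW:
        return "0000" + mm + dd  # 00/00/0000 -> "00000000"
    if mmddyy == HIGH:
        return "9999" + mm + dd  # 99/99/9999 -> "99999999"
    century = "20" if int(yy) < PIVOT else "19"
    return century + yy + mm + dd

def needs_date_edit(value: str) -> bool:
    """Sentinels are not calendar dates, so they skip the date edit."""
    return value not in (LOW, HIGH)

def selected(rec_date: str, low: str = LOW, high: str = HIGH) -> bool:
    return bound_key(low) <= bound_key(rec_date) <= bound_key(high)

print(selected("06/15/98"))                          # True
print(selected("01/15/00"))                          # True
print(selected("06/15/98", "01/01/01", "12/31/99"))  # False: the old default selects nothing
```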

In some cases, certain fields allow the year 00 to indicate that no year was entered. Indeed, this type of field is often the first to cause Year 2000 failures. “No year entered” poses a special problem for regression testing as well. In database conversion, you circumvent the issue by assuming that any 00 years must signify that no year was entered and by converting them to a valid “no year entered” value of 0000. Application processing, however, will always convert year 00 to 2000, and this value of 2000 gets written to the database for any new or updated records. If, during regression testing, you happen to write records with 00 years, you pose a difficult problem for the database comparison program: should 00 years in the base library be expanded to 0000 or 2000 for comparison? Allowing both could let a problem slip through; allowing only one or the other could generate false error conditions. These fields demand special attention.
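One way to give these fields that attention is for the comparison program to treat a base-library 00 year as neither a match nor a mismatch, and to route it to manual review. This Python sketch shows that design choice. The three-way result and the fixed window with pivot 40 are hypothetical, not part of any particular Y2K toolset.

```python
def compare_year(old_yy: int, new_yyyy: int, pivot: int = 40) -> str:
    """Compare one year field; a base-library 00 year is ambiguous and needs review."""
    if old_yy == 0:
        # The database conversion writes 0000 ("no year"); application updates write 2000.
        return "review" if new_yyyy in (0, 2000) else "mismatch"
    expected = 2000 + old_yy if old_yy < pivot else 1900 + old_yy
    return "match" if new_yyyy == expected else "mismatch"

print(compare_year(98, 1998))  # match
print(compare_year(0, 0))      # review
print(compare_year(0, 2000))   # review
print(compare_year(0, 1900))   # mismatch
```

Flagging rather than accepting avoids both failure modes named above. Neither value is silently allowed, and neither is reported as a hard error.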

Worthwhile for Many but Not All

Regression testing is certainly a highly reliable method of ensuring that Year 2000 modifications have not damaged existing application logic. The initial cost runs high, especially when you consider the need to develop automated testing procedures and comparison programs. The cost might appear excessive, especially given the shrinking time frame of Year 2000 conversion. However, the usefulness of regression testing extends beyond Year 2000 issues; if you ever plan to modify your system again, you can apply these same test procedures to make sure that your modifications don’t affect your core functions.

Regression testing is no silver bullet. Fundamental application design issues or time and resource constraints can lessen the effectiveness of regression testing to the point that it no longer makes sense. However, if you can avoid these pitfalls and use the Year 2000 conversion as an opportunity to implement regression testing as a part of your normal site operations, you will provide yourself with significant benefits not just now but on later projects as well.

Each shop has its own unique requirements, which is why you need as many alternatives as possible. But when it comes to testing, only you can decide whether you need a Hyundai or a Humvee.

Figure 1: Environments used in a regression test (B0, C0, and T0 before the flow test; B1, C1, and T1 after)

Figure 2: CMPPFM can tell you whether your regression test is successful or not


MC Press Online