“Explainability” is the process of explaining why an ML model made a particular decision, such as approving or declining a bank loan.

Editor's Note: This article is excerpted from chapter 3 of Artificial Intelligence: Evolution and Revolution.

Previous articles took a general look at Algorithmic Bias and Explainability and then examined the Types of Algorithmic Bias. This article turns to "Explainability."

Explainability

Customers are trying to use AI as a catalyst to reimagine their workflows, transforming how customer call centers operate, how people complete their taxes, and how legal professionals make data-privacy compliance decisions. However, many organizations still struggle to deploy AI into production environments and across their existing applications.

For AI to thrive and for businesses to reap its benefits, executives need to trust their AI systems. They need capabilities to manage those systems and to detect and mitigate bias. It is critical, and often a legal imperative, to bring transparency into AI decision-making. In the insurance industry, for example, claims adjusters may need to explain to a customer why an automated processing system rejected their auto claim.

It’s time to start breaking open the black box of AI to give organizations confidence in their ability both to manage those systems and to explain how decisions are made.

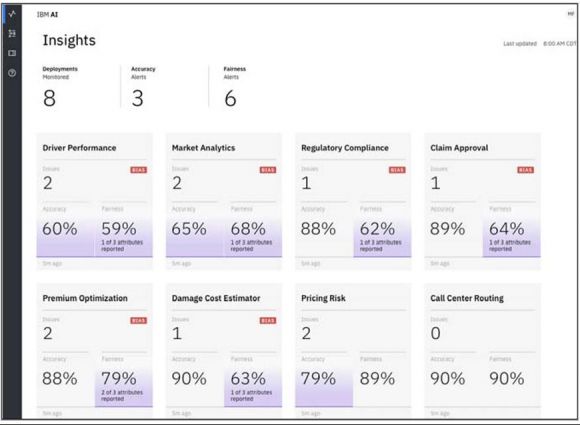

IBM recently announced its Trust and Transparency capabilities for AI on the IBM Cloud. Built with technology from IBM Research, these capabilities help provide visibility into how AI is making decisions and give recommendations on how to mitigate potentially damaging bias. They include a visually clear dashboard that line-of-business (LOB) users can easily understand, which reduces the burden of accountability on data scientists and empowers business users.

Accelerating Trusted AI Across Your Business

The goal should be to empower businesses to infuse their AI with trust and transparency, thus building confidence to deploy AI systems in production.

Trust and transparency capabilities for AI on the IBM Cloud are intended to support models built in any IDE or with popular open-source ML frameworks. Once deployed, those models can be monitored and managed by these capabilities at runtime, while AI decisions are being made.

So, let’s say you build and deploy a system of models behind an API that your business can call whenever it needs a prediction. These capabilities can hook into those models and help instrument a layer that enables organizations to capture the input the models receive and the output they produce.

These capabilities can help provide a level of transparency, auditability, and explainability by logging every individual transaction throughout a model’s operational life. The lineage of these models is presented to business users at runtime in a way that’s easy to understand, something that’s unattainable with most of the tools available today.
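To make the idea of an instrumentation layer more concrete, here is a minimal Python sketch of payload logging. It is a wrapper that sits in front of a model's prediction function, records each request's inputs and output under a generated transaction ID, and lets you look a transaction up later. The names `PayloadLogger`, `TransactionRecord`, and `lookup`, along with the toy loan rule, are hypothetical illustrations rather than IBM Cloud APIs. A production system would also write to durable storage instead of an in-memory list.

```python
import uuid
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Any, Callable, Dict, List


@dataclass
class TransactionRecord:
    """One logged scoring request: what the model saw and what it returned."""
    transaction_id: str
    timestamp: str
    inputs: Dict[str, Any]
    output: Any


@dataclass
class PayloadLogger:
    """Hypothetical instrumentation layer wrapped around a model's predict function."""
    predict_fn: Callable[[Dict[str, Any]], Any]
    records: List[TransactionRecord] = field(default_factory=list)

    def score(self, inputs: Dict[str, Any]) -> TransactionRecord:
        output = self.predict_fn(inputs)
        record = TransactionRecord(
            transaction_id=str(uuid.uuid4()),
            timestamp=datetime.now(timezone.utc).isoformat(),
            inputs=dict(inputs),  # copy so later changes by the caller are not logged
            output=output,
        )
        self.records.append(record)
        return record

    def lookup(self, transaction_id: str) -> TransactionRecord:
        """Retrieve a logged transaction by its ID (linear scan; fine for a sketch)."""
        for record in self.records:
            if record.transaction_id == transaction_id:
                return record
        raise KeyError(transaction_id)


def toy_loan_model(x: Dict[str, Any]) -> str:
    # Hypothetical rule: approve when income covers the payment three times over.
    return "approve" if x["income"] >= 3 * x["loan_payment"] else "decline"


logger = PayloadLogger(toy_loan_model)
rec = logger.score({"income": 5000, "loan_payment": 1200})
print(rec.transaction_id, rec.output)
```

The application that called the model can pass `rec.transaction_id` along, so that anyone can later use the ID to retrieve exactly what the model saw and decided.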

Fairness is a key concern among enterprises deploying AI into apps that make decisions based on user information. The reputational damage and legal impact resulting from demonstrated bias against any group of users can be seriously detrimental to businesses. AI models are only as good as the data used to train them, and developing a representative, effective training dataset is very challenging.

In addition, even if such biases are identified during training, the model may still exhibit bias at runtime. This can result from incongruities in optimization caused by the assignment of different weights to different features.

As they log each transaction, the trust and transparency capabilities feed inputs and outputs into a battery of state-of-the-art bias-checking algorithms to track bias at runtime. If bias is detected, the capabilities can help provide bias-mitigation recommendations in the form of corrective data, which can be used to incrementally retrain the model for redeployment.
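The text does not say which bias-checking algorithms are used. As one commonly used example, the sketch below computes the disparate impact ratio over logged (inputs, output) pairs. This ratio is the favorable-outcome rate for a monitored group divided by the favorable-outcome rate for a reference group. The 0.8 default threshold follows the widely cited "four-fifths" rule of thumb and is an assumption here, as are the attribute names and sample data.

```python
from typing import Any, Dict, List, Tuple

Transaction = Tuple[Dict[str, Any], Any]  # (model inputs, model output)


def favorable_rate(log: List[Transaction], attribute: str, group: Any, favorable: Any) -> float:
    """Share of one group's transactions that received the favorable outcome."""
    outcomes = [out for inputs, out in log if inputs.get(attribute) == group]
    if not outcomes:
        raise ValueError(f"no transactions with {attribute}={group!r}")
    return sum(1 for out in outcomes if out == favorable) / len(outcomes)


def disparate_impact(log: List[Transaction], attribute: str, monitored: Any,
                     reference: Any, favorable: Any) -> float:
    """Favorable rate of the monitored group divided by that of the reference group."""
    ref_rate = favorable_rate(log, attribute, reference, favorable)
    if ref_rate == 0:
        raise ValueError("reference group never received the favorable outcome")
    return favorable_rate(log, attribute, monitored, favorable) / ref_rate


def bias_alert(log: List[Transaction], attribute: str, monitored: Any, reference: Any,
               favorable: Any, threshold: float = 0.8) -> bool:
    """Flag bias when the ratio drops below an adjustable threshold (0.8 is an assumed default)."""
    return disparate_impact(log, attribute, monitored, reference, favorable) < threshold


# Hypothetical logged transactions.
log = [
    ({"age_group": "under_25", "income": 3000}, "approve"),
    ({"age_group": "under_25", "income": 3200}, "decline"),
    ({"age_group": "under_25", "income": 2800}, "decline"),
    ({"age_group": "25_plus", "income": 3100}, "approve"),
    ({"age_group": "25_plus", "income": 2900}, "approve"),
    ({"age_group": "25_plus", "income": 3300}, "decline"),
]
ratio = disparate_impact(log, "age_group", "under_25", "25_plus", "approve")
print(ratio, bias_alert(log, "age_group", "under_25", "25_plus", "approve"))
```

Because the check only counts outcomes already in the log, it is cheap enough to rerun continuously as new transactions arrive.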

The problem of bias detection can be addressed by automatically analyzing transactions against adaptable bias thresholds: the value of a monitored attribute is changed while the values of all other attributes remain exactly the same, and the resulting outcomes are compared. Traditional methods of measuring bias at build time rely on techniques that are computationally prohibitive to run at runtime on a complex AI system.
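Here is a minimal sketch of a "change one attribute, keep everything else the same" check, assuming that this is roughly what the passage describes. For each logged transaction in the monitored group, the code switches only the monitored attribute to the reference value, rescores the transaction, and counts how often the outcome changes. The deliberately biased `toy_model`, the attribute values, and the 0.1 tolerance are hypothetical.

```python
from typing import Any, Callable, Dict, List

Record = Dict[str, Any]


def flip_test(model: Callable[[Record], Any], log: List[Record], attribute: str,
              monitored: Any, reference: Any) -> float:
    """Share of monitored-group transactions whose outcome changes when only `attribute` is switched."""
    candidates = [rec for rec in log if rec.get(attribute) == monitored]
    if not candidates:
        return 0.0
    changed = 0
    for inputs in candidates:
        perturbed = dict(inputs)
        perturbed[attribute] = reference  # every other attribute keeps its exact value
        if model(perturbed) != model(inputs):
            changed += 1
    return changed / len(candidates)


def toy_model(x: Record) -> str:
    # Deliberately biased toy rule: younger applicants face a higher income cutoff.
    cutoff = 3500 if x["age_group"] == "under_25" else 3000
    return "approve" if x["income"] >= cutoff else "decline"


log = [
    {"age_group": "under_25", "income": 3200},  # declined, but approved if 25_plus
    {"age_group": "under_25", "income": 4000},  # approved either way
    {"age_group": "under_25", "income": 2500},  # declined either way
    {"age_group": "25_plus", "income": 3100},   # not in the monitored group
]
TOLERANCE = 0.1  # hypothetical, user-adjustable bias threshold
rate = flip_test(toy_model, log, "age_group", "under_25", "25_plus")
print(f"outcome changed for {rate:.0%} of monitored transactions; biased: {rate > TOLERANCE}")
```

Each check needs only two model calls per transaction, and that low cost is what makes this kind of test practical to run on live traffic.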

These capabilities incorporate novel techniques that help automatically synthesize data in order to compute bias on a continuous basis. These techniques combine symbolic execution with local explainability to generate effective test cases, creating highly flexible, efficient, and comprehensive bias detection capabilities, as shown in Figure 3.1.
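Symbolic execution combined with local explainability is too involved for a short example, so the sketch below substitutes a much simpler technique, plain random sampling. It is not the method the text describes. The code synthesizes test pairs by drawing the non-monitored features from values seen in the log, then creating two copies of each draw that differ only in the monitored attribute. It then measures how often the model's outcome differs between the two records in each pair. All function names, data, and models are illustrative assumptions.

```python
import random
from typing import Any, Callable, Dict, List, Tuple

Record = Dict[str, Any]


def synthesize_pairs(log: List[Record], attribute: str, monitored: Any, reference: Any,
                     n: int, seed: int = 0) -> List[Tuple[Record, Record]]:
    """Build n synthetic pairs that are identical except for `attribute`."""
    rng = random.Random(seed)  # seeded so the generated test cases are reproducible
    other_features = sorted({key for rec in log for key in rec if key != attribute})
    # Draw each non-monitored feature independently from the values observed in the log.
    pools = {key: [rec[key] for rec in log if key in rec] for key in other_features}
    pairs = []
    for _ in range(n):
        base = {key: rng.choice(pools[key]) for key in other_features}
        pairs.append(({**base, attribute: monitored}, {**base, attribute: reference}))
    return pairs


def disagreement_rate(model: Callable[[Record], Any], pairs: List[Tuple[Record, Record]]) -> float:
    """Fraction of pairs where changing only the monitored attribute changes the outcome."""
    if not pairs:
        return 0.0
    return sum(model(a) != model(b) for a, b in pairs) / len(pairs)


def biased_model(x: Record) -> str:
    cutoff = 3500 if x["age_group"] == "under_25" else 3000  # deliberately biased
    return "approve" if x["income"] >= cutoff else "decline"


def fair_model(x: Record) -> str:
    return "approve" if x["income"] >= 3000 else "decline"  # ignores age_group


log = [
    {"age_group": "under_25", "income": 3200},
    {"age_group": "25_plus", "income": 4000},
    {"age_group": "25_plus", "income": 2500},
]
pairs = synthesize_pairs(log, "age_group", "under_25", "25_plus", n=200)
print(disagreement_rate(biased_model, pairs))
print(disagreement_rate(fair_model, pairs))  # 0.0
```

Unlike the transaction-based check, synthetic pairs can probe combinations of feature values that have not yet shown up in live traffic. Independent sampling, however, ignores correlations between features, and this is one reason more sophisticated generators are used in practice.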

Figure 3.1: Comprehensive bias detection at run time

Explainability Enables Business Ownership

Trust and transparency capabilities can help bridge the gap between data scientists, developers, and business users within an organization, providing them visibility into what’s happening in their AI systems.

Through intuitive visual dashboards, businesses can access easy-to-understand explanations of transactions. Users can simply enter a transaction ID, which an application can pass into the user interface, to get details about the features used to make a decision, the limits, the inputs passed, and, most importantly, the confidence level of each factor contributing to the decision the AI model helped make.
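The idea of a per-factor explanation can be illustrated with the simplest possible case, a hypothetical logistic model. For a model that is linear in log-odds, each feature's contribution relative to a baseline applicant is exactly weight × (value − baseline), and the contributions add up to the difference between the transaction's log-odds and the baseline's. The weights, baseline, and transaction ID below are invented for illustration. The text does not specify how IBM computes its per-factor confidence, and nonlinear models need approximation methods such as LIME or SHAP instead.

```python
import math
from typing import Any, Dict

# Hypothetical logistic loan-approval model (all numbers are illustrative).
WEIGHTS = {"income_k": 0.8, "debt_ratio": -4.0, "years_employed": 0.3}
INTERCEPT = -3.0
# Hypothetical "typical applicant" used as the reference point for explanations.
BASELINE = {"income_k": 4.0, "debt_ratio": 0.35, "years_employed": 3.0}

# Stand-in for the transaction log, keyed by transaction ID.
TRANSACTIONS: Dict[str, Dict[str, float]] = {
    "txn-0001": {"income_k": 5.2, "debt_ratio": 0.55, "years_employed": 1.0},
}


def log_odds(features: Dict[str, float]) -> float:
    return INTERCEPT + sum(WEIGHTS[name] * features[name] for name in WEIGHTS)


def explain(transaction_id: str) -> Dict[str, Any]:
    """Return inputs, approval probability, and per-feature contributions ranked by size."""
    features = TRANSACTIONS[transaction_id]
    contributions = {
        name: WEIGHTS[name] * (features[name] - BASELINE[name]) for name in WEIGHTS
    }
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return {
        "transaction_id": transaction_id,
        "inputs": features,
        "approval_probability": 1.0 / (1.0 + math.exp(-log_odds(features))),
        "contributions": ranked,
    }


report = explain("txn-0001")
for name, value in report["contributions"]:
    print(f"{name:>15}: {value:+.2f}")
```

For this transaction, income pushes toward approval (+0.96 in log-odds), while the higher debt ratio (−0.80) and shorter employment (−0.60) push against it, and the approval probability comes out at roughly 0.32. A business user can read that ranking without knowing anything about how the model was trained.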

Thus, even though business-process owners might have minimal understanding of how the model works, they can still gain insight into the decision-making process and easily compare the model’s performance against human decisions. As a result, they can make judgments about AI model health and recognize when the system might need help from data scientists.

Through these capabilities, data scientists and developers can obtain insights into the near real-time performance of their models, which business users can also measure and better understand. This insight helps reveal whether models are making biased decisions and what effect those decisions have on the enterprise. The capabilities also supply corrective feedback data that data scientists can incorporate into their models to address biased behavior. IBM’s Trust and Transparency capabilities are intended to help users understand the decisions their AI models are making at runtime, something that was not previously available.

Get Started with Trusted AI

If AI is truly going to augment operational decision-making, it’s critical to make AI outcomes transparent and explainable—not only to data science teams, but also to the line-of-business user responsible for those decisions.



MC Press Online