Indexes (logical files) can really speed up your SQL queries. But a poor indexing strategy can hurt AS/400 system performance in two ways. First, a large table without the proper indexes may cause a lot of unnecessary sort operations or table scans. Conversely, a large table with too many indexes incurs a greater maintenance penalty. Remember: Just because an index is a logical file doesn’t mean it is maintenance-free. Each time you insert, update, or delete a record in an AS/400 physical file, the index files associated with that file must eventually be maintained by your AS/400. Achieving a proper balance between required and superfluous indexes is an art.

To assist you in your artistic quest for index nirvana, I have assembled a spreadsheet that will track the use of index files in an AS/400 library. To use my spreadsheet, appropriately named “IndexSlackerTracker,” you will need Excel 97 or above, ActiveX Data Objects (ADO), and an OLE DB provider or an ODBC driver (either IBM’s own products or ones from a third-party vendor). This working spreadsheet is available in Excel 97 or Excel 2000 format from the MC Web site (www.midrangecomputing.com/mc). So, go get the code and follow along. Optimize your indexing strategy the graphical way!

What’s Your Indexes’ Batting Average?

If your databases are like mine, you generate a list of indexes and find a lot of files, and you don’t have a clue as to why the indexes are there or what they are for. Contractors come and go, database administrators and programmers create temporary indexes, and trash just seems to collect on large systems. Your task is to determine which indexes are being used and which ones are just wasting valuable binary real estate...and then delete the worthless ones!

One method for identifying slackers is based on the number of accesses (reads) against each index. The AS/400 keeps internal counters associated with each index that are incremented each time the index is read. By comparing the number of accesses over time, you can create a useful metric to evaluate the appropriateness of an index.

By calling the AS/400’s Display File Description (DSPFD) command, you can access this information. However, DSPFD is, to put it in sanitized, politically correct language, interface-challenged. Fortunately, the output of this command can be redirected into and read from a physical file, allowing you to format friendly screens and utilities with the data. In fact, the ability to redirect this information to a file is the basis of my slacker-hunting utility.

The Meat of DSPFD

If you execute DSPFD against a known index on your AS/400, you can page through the information and see a field titled Access Path Logical Reads, which displays a number. There is not much information regarding this field in the OS/400 File API’s documentation, but through experimentation, I have found that this number is incremented once for every index record that is searched during the processing of an SQL or Query/400 request. To illustrate how the number increases, I’ll go through a simple example in which I have an employee file with an index over the last-name field. I execute a query asking for people whose last names start with SM. Fifty records match my criteria. The index was used to find these records, so the logical-reads counter increases by 50. Now, I modify my query to look for SM employees but add the constraint that they also must have been hired in the last year. The query results in three records. However, the system again used the index and had to look at 50 SM records, so the counter increased by 50, not three.
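The counter behavior described above can be mimicked with a small simulation. This is only a sketch of the idea, not a model of how OS/400 actually walks an access path: the employee rows, the `query` function, and the hire-date cutoff are all made up for illustration.

```python
from datetime import date

# Hypothetical employee rows: (last_name, hire_date). 50 names start with SM,
# and only 3 of those were hired recently.
employees = [(f"SMITH{i:02d}", date(1990, 1, 1)) for i in range(47)]
employees += [(f"SMYTHE{i}", date(1999, 6, 1)) for i in range(3)]
employees += [("JONES", date(1999, 6, 1)), ("BROWN", date(1985, 3, 15))]

# Stand-in for an index keyed on last name only.
last_name_index = sorted(employees)


def query(prefix, hired_after=None):
    """Return (matching rows, index entries read) for a last-name prefix search."""
    reads = 0
    rows = []
    for name, hired in last_name_index:
        if not name.startswith(prefix):
            continue  # outside the key range, so not counted as a read
        reads += 1  # every entry in the SM key range is read
        # The hire date is not part of the key, so it is checked after the read.
        if hired_after is None or hired > hired_after:
            rows.append((name, hired))
    return rows, reads


rows1, reads1 = query("SM")
rows2, reads2 = query("SM", hired_after=date(1999, 1, 1))
print(len(rows1), reads1)
print(len(rows2), reads2)
```

Both calls read the same 50 index entries, even though the second one returns only three rows, which is the effect the logical-reads counter records.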

Now, I’d like to illustrate how you could use this number. If you generated a report of accesses and saw a large number of hits on this index, and if you were able to correlate that fact to the query example above, you might find that adding the hire-date field to the index would reduce the number of times the index is accessed before the query search criteria are satisfied. This is, of course, a simple example and relies on the assumption that this query would be executed often enough to warrant the change to the index. The spreadsheet provided cannot make these assumptions, but it can help your brain in spotting areas for investigation.

Now that you understand how this number is stored and updated and how you can use this number, take a look at how a Microsoft Excel spreadsheet can help in the administration of your database. Operationally, the spreadsheet has two macros: GETIX and UPDIX. [Editor’s note: After downloading the IndexSlackerTracker spreadsheet, you need to create an ODBC data source name (DSN) to retrieve data from your AS/400. You also need to change the GETIX and UPDIX Visual Basic (VB) macro code to reference your AS/400, reference the name of your ODBC or OLE DB provider, and provide the correct AS/400 user name and password (if desired) to access your AS/400 data.] First, run macro GETIX by using the Tools/Macro menu item, selecting the GETIX macro, and then pressing the Run button. The macro will invoke the DSPFD command to create an output file of member information for all logical files in a given library. Through the magic of SQL, the macro retrieves the names of the files in that library, each file’s size, and the number of accesses (logical-file reads) performed against each file. The macro then formats the information into a pretty spreadsheet. At this point, save the spreadsheet and wait awhile to give your users time to do their normal activities.

Later in the day, open the spreadsheet again and run the UPDIX macro to get a new total of index accesses so that the use of the indexes can be calculated. The UPDIX macro will also execute a DSPFD command to retrieve index reads, create formulas in the spreadsheet that calculate the number of reads since the running of the GETIX macro, and create a metric for viewing the number of reads per second. The macro then displays this data side by side with the GETIX data for your inspection. Now, I’ll take the macros apart to show you how they operate.

The Illustrated GETIX

GETIX is deceptively simple. Due to space limitations, I cannot explain each macro line in detail, so I’ll just hit the important points. The macro connects to the AS/400 and prompts for a library name. After it has the name, it runs DSPFD as a stored procedure. Any AS/400 command can be called as if it were a stored procedure, even if it is not declared as one. This process is accomplished through a call to QCMDEXC, which lives in the QSYS library. QCMDEXC takes two arguments: a string representing the command to run and the length of the string in decimal(15,5) format. The MKRUNCMD function takes the string, determines its length, and formats the call to QCMDEXC. The formatted call is then placed into the CommandText property of the command object, and the command is executed. Take a moment to look at the command the program calls:

DSPFD FILE(NEWS/*ALL) TYPE(*MBR) OUTPUT(*OUTFILE) OUTFILE(QTEMP/HOWIE) FILEATR(*LF)

This command instructs the AS/400 to generate the output file QTEMP/HOWIE, which contains all of the member information—signified by TYPE(*MBR)—for all of the logical files—signified by FILEATR(*LF)—that exist in the library NEWS. There are two reasons to generate the file into QTEMP. One is that the file will be automatically cleaned up by the AS/400 when the connection object is closed. The second is that if two people run the command at the same time, they will not stomp on each other’s files.
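To make the command-wrapping step concrete, here is a rough Python sketch of what a helper like MKRUNCMD might do. The spreadsheet's real macro is VB and is not reproduced here; the function names `build_dspfd` and `mk_run_cmd`, the quote escaping, and the zero-padded length layout (10 digits, a decimal point, 5 decimals) are assumptions, so check them against IBM's QCMDEXC documentation and your driver's naming convention before relying on them.

```python
def build_dspfd(library: str, outfile: str = "QTEMP/HOWIE") -> str:
    """Build the DSPFD command that dumps member info for all logical files."""
    return (
        f"DSPFD FILE({library}/*ALL) TYPE(*MBR) OUTPUT(*OUTFILE) "
        f"OUTFILE({outfile}) FILEATR(*LF)"
    )


def mk_run_cmd(command: str) -> str:
    """Wrap a CL command in a CALL to QCMDEXC (hypothetical MKRUNCMD look-alike)."""
    # Double any single quotes so the command survives as an SQL string literal.
    escaped = command.replace("'", "''")
    # The length describes the command itself, not the escaped literal.
    # Width 16 = 10 integer digits + decimal point + 5 decimals (assumed layout).
    return f"CALL QSYS.QCMDEXC('{escaped}', {len(command):016.5f})"


dspfd = build_dspfd("NEWS")
call_text = mk_run_cmd(dspfd)
print(call_text)
```

The resulting string is what would be assigned to the command object's CommandText property before the command is executed.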

The next point of interest is the following SQL statement, which reads the file generated by the macro:

select mbbof, mbfile, mbisiz, mbaclr from qtemp.howie where mbbof not like 'QADB%' and mbisiz>0 order by 1,2

MBBOF is the based-on-file field and represents the physical file on which this logical file is based, while MBFILE is the name of the index. MBISIZ is the size of the logical file, and MBACLR holds the number of times the file has been read. By specifying that MBISIZ must be greater than zero, the where clause ensures that you do not read SQL views. The rest of the macro is devoted to creating the spreadsheet output.
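To see what the where clause filters out, the same statement can be tried against a few made-up rows. The sketch below uses Python's built-in sqlite3 module purely as a stand-in for the AS/400; the file names and numbers are invented, and the real outfile has many more columns than the four created here.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Attach a second in-memory database named qtemp so qtemp.howie resolves.
conn.execute("ATTACH DATABASE ':memory:' AS qtemp")
conn.execute(
    "CREATE TABLE qtemp.howie "
    "(mbbof TEXT, mbfile TEXT, mbisiz INTEGER, mbaclr INTEGER)"
)

# Hypothetical DSPFD output: two real indexes, one SQL view (size 0),
# and one logical file based on a file whose name starts with QADB.
sample = [
    ("EMPLOYEE", "EMPLNAME", 40960, 1500),
    ("EMPLOYEE", "EMPVIEW", 0, 0),
    ("QADBXREF", "QADBXLFI", 8192, 10),
    ("CUSTOMER", "CUSTIX1", 20480, 0),
]
conn.executemany("INSERT INTO qtemp.howie VALUES (?, ?, ?, ?)", sample)

rows = conn.execute(
    "select mbbof, mbfile, mbisiz, mbaclr from qtemp.howie "
    "where mbbof not like 'QADB%' and mbisiz>0 order by 1,2"
).fetchall()

for row in rows:
    print(row)
conn.close()
```

Only the CUSTIX1 and EMPLNAME rows come back, ordered by based-on file and then by index name.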

Letting UPDIX Do the Tricks

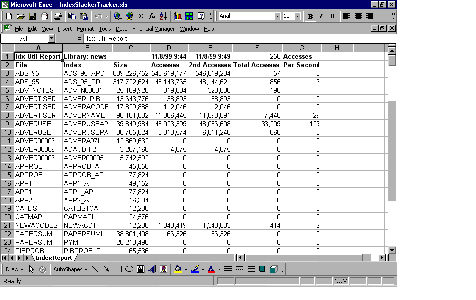

OK. As seen in Figure 1, you have generated a pretty spreadsheet showing how often your logical files have been accessed. Now what? This is where UPDIX comes into play. UPDIX uses the same DSPFD and SQL statement, but in addition, it creates formulas and named cells to create a total-number-of-accesses column (Total Accesses in Figure 1, column F) and an accesses-per-second column (Accesses Per Second in Figure 1, column G). UPDIX places a time stamp of its first and second runs in cells D1 and E1, respectively. The formula in cell F1 uses the hours, minutes, and seconds functions to calculate the number of seconds between the time stamps in cells D1 and E1; this cell (F1) is named TOTS. Finally, the accesses-per-second values are calculated using this formula:

(UPDIX accesses - GETIX accesses)/TOTS.
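The arithmetic behind the two calculated columns can be sketched in a few lines of Python. This is not the spreadsheet's own formula: the function name and sample numbers are hypothetical, and the sketch subtracts full timestamps rather than using hour, minute, and second functions, so it also copes with runs that span midnight. The later (UPDIX) count minus the earlier (GETIX) count gives a positive number of reads.

```python
from datetime import datetime


def accesses_per_second(getix_reads, updix_reads, getix_time, updix_time):
    """Return (total accesses, accesses per second) between the two runs."""
    tots = (updix_time - getix_time).total_seconds()  # the TOTS value
    if tots <= 0:
        raise ValueError("UPDIX must run after GETIX")
    total = updix_reads - getix_reads  # the counter only grows, so later minus earlier
    return total, total / tots


# Hypothetical readings taken one hour apart.
total, rate = accesses_per_second(
    getix_reads=1500,
    updix_reads=5100,
    getix_time=datetime(2000, 1, 10, 9, 0, 0),
    updix_time=datetime(2000, 1, 10, 10, 0, 0),
)
print(total, rate)
```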

Using the Data

After you have generated the spreadsheet, look for logical files that are never accessed and logical files that have a large number of accesses. The first case indicates you may be able to delete the logical file as long as it is not performing some necessary referential constraint or being used by a system program that is not accessing the file via Query/400 or SQL. Remember, this counter is incremented only if the file access is via Query/400 or SQL, so you should ensure that a program performing native file access does not need the logical file before removing the logical file. A large number of accesses can indicate a very active index, and you may want to look at the keys used in that index. Ask yourself the following question: Can the keys be refined to help narrow the search criteria? If so, you may cut down on overall file accesses and index accesses on your system, thereby improving performance.
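A first pass over the finished spreadsheet could be automated along these lines. The sketch only splits indexes into "never read" and "very busy" lists; the row layout, the `triage` name, and the 10-reads-per-second threshold are all arbitrary assumptions, and an index in the first list still needs the manual checks described above before it is deleted.

```python
def triage(rows, busy_reads_per_second=10.0):
    """Split (index_name, total_accesses, accesses_per_second) rows into two lists.

    Returns (unused, busy): indexes with no reads in the interval, and indexes
    whose read rate meets the (hypothetical) busy threshold.
    """
    unused = [name for name, total, _ in rows if total == 0]
    busy = [name for name, _, rate in rows if rate >= busy_reads_per_second]
    return unused, busy


# Hypothetical values copied from the Total Accesses and Accesses Per Second columns.
report = [
    ("EMPLNAME", 3600, 1.0),
    ("CUSTIX1", 0, 0.0),
    ("ORDERIX3", 90000, 25.0),
]
unused, busy = triage(report)
print("Deletion candidates:", unused)
print("Review the keys of:", busy)
```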

I have given you a useful spreadsheet that interacts with your AS/400 and produces a nice report. Use it to examine your libraries in production and batch settings at different times of the day. Some indexes may be used only by nightly batch operations, while others may be important to online transaction processing (OLTP) operations. You can improve the spreadsheet to generate graphs, or you can expand it to include more information that is available from the DSPFD command. In all you do with it, have fun rooting out those slackers!

Figure 1: This figure demonstrates the output after both macros have been executed.

MC Press Online